The Problem

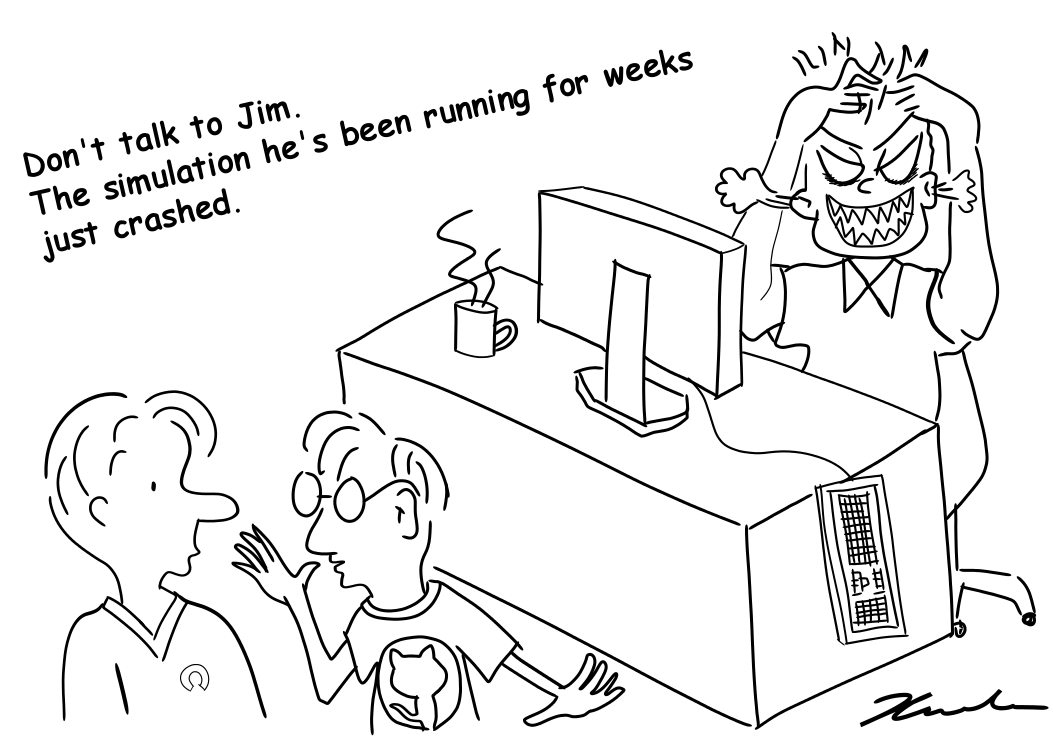

After testing out your newly developed software on a small dataset (which you always do, right?), you decide it is time to run a fairly large dataset that may give you a breakthrough in your research. Since all seems well after you submit the big job, you congratulate yourself and celebrate by reading a book, watching TV, or perhaps reading more science papers while the simulation runs for several days or weeks.

Several days later, one of the following happens:

- Someone uses up all the memory in the system

- There is a power failure

- Unplanned maintenance takes place

- A node goes down due to hardware failure

- An unhandled software fault occurs

When using large HPC systems, the last two items on the list are the most likely. The job will probably need to be restarted from scratch. If you are using a script, it may be possible to alter it to start roughly from where you left off, but that is still an expensive operation in terms of your time. You may now miss your deadline, and you have potentially lost a lot of computing hours. If any of these have happened to you, then you have undoubtedly wished there were a way to prevent it. The good news is that checkpoint/restart (C/R) can almost certainly help with this problem.
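To make "starting roughly from where you left off" concrete, here is a minimal sketch of application-level checkpointing in Python. It is an illustration only, not a method this workshop prescribes: the function names (`run_with_checkpoints`, `save_checkpoint`), the JSON file format, the toy workload (summing integers), and the `fail_at` parameter used to simulate a crash are all assumptions made for the example.

```python
import json
import os


def save_checkpoint(state, path):
    """Write state to a temporary file, then atomically swap it into place.

    The swap means a crash during the write cannot leave a half-written
    checkpoint behind under the real file name.
    """
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)


def run_with_checkpoints(total_steps, checkpoint_path,
                         checkpoint_every=100, fail_at=None):
    """Toy workload: sum the integers 0..total_steps-1, saving progress.

    fail_at is a hypothetical knob that raises an error at a given step
    to stand in for a node failure or power loss.
    """
    # Resume from the saved state if a checkpoint exists; otherwise start fresh.
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)
    else:
        state = {"step": 0, "total": 0}

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated failure")
        state["total"] += state["step"]   # one unit of "work"
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(state, checkpoint_path)

    return state["total"]
```

If the first run fails partway through, calling `run_with_checkpoints` again with the same `checkpoint_path` resumes from the last saved step instead of step 0, so only the work done since the most recent checkpoint is repeated. The checkpoint interval is the trade-off to tune: saving more often loses less work on failure but spends more time on I/O.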

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)