Ways to Work with Compilers

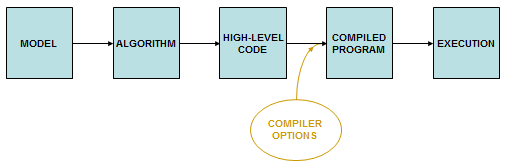

As mentioned earlier, it used to be the case that hand tuning improved on compiler optimization frequently enough that it was standard practice. But now, compiler-generated code generally outperforms hand-written assembly code. However, compilers are not (yet) so clever that they need no guidance from the human programmer. If you have some understanding of what the compiler is trying to do, you can try to help it "do the right thing". One way to guide the compiler is through the various command-line options that are available.

The bulk of this section is therefore a straightforward look at which compiler flags might be good to use. It is important to note that whether or not a particular compiler flag is "good" might well depend on the system on which it is being applied, as well as the version of the software in effect. Bear in mind, too, that certain compiler options are meant to be architecture-specific; a set of flags that were indispensable for one generation of microprocessors might be a real hindrance for the next. So take a hard look at the makefiles that were distributed with your favorite piece of software—you may find that the default compiler flags are in serious need of updating.

You may find that you'll want to give the compiler a little extra help in places. For example, most of the recent compilers (including GCC 12 and later) will attempt to

vectorize

loops in your code if you just give it the basic optimization option "-O2" (which is the default for Intel compilers, but not GCC). But you can

also have it issue a vectorization report, indicating which loops took advantage of

SIMD

registers for faster

floating point calculations. If you observe that vectorization is failing to occur in a critical area of

your code, you might want to assure the compiler that loop(s) can be maximally vectorized by inserting

compiler directives

or pragmas at the

beginning of the loop(s) in question. One possible method is to use OpenMP 4.0 syntax such as

#pragma omp simd (in C/C++) to guide the compiler.

We can go a step further with this example. It turns out that vectorization is especially effective when

arrays are aligned on

particular boundaries in memory. The compiler will often align your arrays for you automatically, but

again, it may need help in places. You can choose to enforce correct alignment for all arrays through a

compiler option, or do it just for certain arrays through special language-specific syntax. Perhaps you

will even want to pad out a struct with unused data to ensure that an array within the struct begins on

an address that is a large enough multiple of 4. (With Intel

Xeon Phi and Skylake,

64-byte alignment is often optimal.) If you are certain that the arrays that appear in a particular loop

are all properly aligned for easy vectorization, you can communicate that fact to the compiler with

#pragma omp simd aligned(). More information can be found in the

OpenMP and Vectorization roadmaps.

On the other hand, you don't want to try too hard to second-guess the compiler, either. Let's give a different example to illustrate when tweaking your source code becomes counterproductive. The old AMD 10h and 12h Optimization Guide shows several ways of writing a loop for summing three arrays in C, starting with the most straightforward expression,

You might try to optimize it with the assembly equivalent of

...but this may actually be slower than the shorter version which indexes into the arrays every time:

While it is true that increments to a pointer can be done faster than a multiplication and several adds, it turns out that having more instructions in the inner loop takes up too much decode bandwidth in the core. At some point you start to realize you don't need (or want) to know this distinction, and you should just let the compiler handle it. There is such a thing as trying to be too clever.

In the spirit of limiting ourselves to "rough tuning", we do not cover (beyond a brief mention here)

advanced techniques that involve making platform-specific or compiler-specific alterations

to your source code. For instance, there are very direct ways to enforce the

desired memory alignment in the vectorization example above. You might consider making use of special "malloc"

functions in Linux, such as posix_memalign() or aligned_alloc(). Equivalently, most

Linux compilers let you declare arrays with a decoration, such as __attribute__((aligned(64)))

or (for C++11 and later) alignas(64). Obviously this gives you quite a lot of influence over how

the code is ultimately compiled, but it may come at some cost in portability.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)