Is My Code Suitable for GPUs?

Before you migrate your application to run on a partition of an HPC cluster featuring lots of GPUs, it's a good idea to consider whether your application is truly well positioned to run faster on this type of architecture. Migrating your code can be easy or hard, but some kinds of code are unlikely ever to realize the full benefits of GPU computing, even after extensive performance optimization. Here's what to look for in the computationally heavy parts of your application to judge whether migration will be worth the effort.

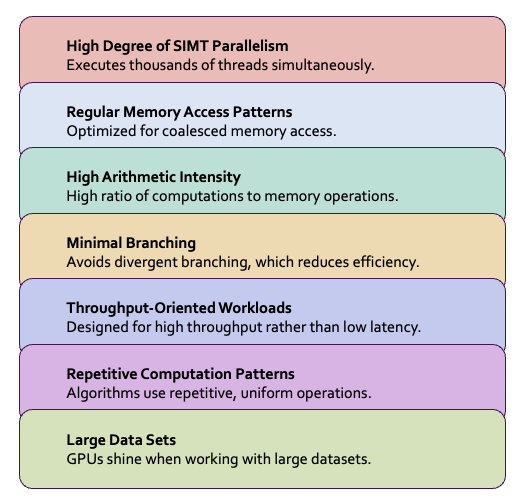

Computational Characteristics of GPU-Friendly Applications

The characteristics of codes that run well on GPUs are very similar to those of codes that vectorize well on CPUs.

- High Degree of SIMT Parallelism: GPUs excel at SIMT (Single Instruction, Multiple Threads) execution. They are ideal for data-parallel workloads that can keep thousands of independent threads busy.

- Regular, Compact Patterns of Memory Access: The GPU's SIMT computations go fastest when memory accesses are coalesced, i.e., when consecutive threads access consecutive memory locations, so that a group of threads can be served by only a few memory transactions.

- High Arithmetic Intensity: GPUs are most efficient when there is a high ratio of computations to memory operations. They are especially well suited for dense linear algebra, such as matrix-matrix multiplication.

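- Minimal Branching: Branches are inefficient in SIMT execution because threads in the same group (a warp) cannot execute the "if" and "else" clauses simultaneously. When threads take different paths, the paths run one after the other, and the threads not on the current path sit idle.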

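- Throughput-Oriented Workloads: GPUs are designed for high throughput rather than low latency. Their caches are small relative to the number of threads they run, so instead of minimizing the latency of any single memory access, they hide it by switching among many threads. Therefore, they perform best when processing large volumes of data.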

- Repetitive Computation Patterns: Algorithms with repetitive, uniform operations map well to GPU architectures. Think in terms of "parallel for" loops.

- Large Data Sets: GPUs shine when working with large datasets that can be processed concurrently.
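Two items on this list, arithmetic intensity and coalesced memory access, lend themselves to quick back-of-envelope checks. The Python sketch below is a toy model, not a measurement or a GPU API. It assumes float32 data (4 bytes per element), a warp of 32 threads as on NVIDIA GPUs, and 128-byte aligned memory segments; real transaction sizes vary by GPU generation. The intensity figures assume each array element crosses the memory bus exactly once, so they are upper bounds.

```python
# Toy model of two GPU-suitability checks (illustrative assumptions only).
ELEM_BYTES = 4       # assumption: single-precision (float32) elements
WARP_SIZE = 32       # assumption: NVIDIA warp width
SEGMENT_BYTES = 128  # assumption: simplified memory transaction size


def vector_add_intensity(n: int) -> float:
    """c = a + b on length-n vectors: n FLOPs; read a and b, write c once."""
    flops = n
    bytes_moved = 3 * n * ELEM_BYTES
    return flops / bytes_moved


def matmul_intensity(n: int) -> float:
    """C = A @ B on n x n matrices: n**3 multiply-adds (2*n**3 FLOPs).
    Best case: read A and B and write C exactly once (an upper bound)."""
    flops = 2 * n ** 3
    bytes_moved = 3 * n * n * ELEM_BYTES
    return flops / bytes_moved


def segments_touched(stride: int) -> int:
    """Distinct segments one warp touches when thread t loads element
    t * stride of a segment-aligned array. Fewer means better coalescing."""
    addresses = [t * stride * ELEM_BYTES for t in range(WARP_SIZE)]
    return len({addr // SEGMENT_BYTES for addr in addresses})


if __name__ == "__main__":
    for n in (256, 1024, 4096):
        print(f"n={n}: vector add {vector_add_intensity(n):.3f} FLOP/B, "
              f"matmul {matmul_intensity(n):.1f} FLOP/B")
    for stride in (1, 2, 32):
        print(f"stride {stride:2d}: {segments_touched(stride)} segment(s) per warp")
```

Under these assumptions, vector addition stays at 1/12 FLOP per byte no matter how large n gets, while matrix multiplication grows as n/6, which is why dense matrix-matrix work suits GPUs so well. Likewise, stride-1 access lets a whole warp share one segment, whereas stride 32 (for example, consecutive threads reading down one column of a row-major array with 32 columns) touches 32 separate segments.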

It's no coincidence that much of this list resembles a checklist for enabling auto-vectorization on CPUs. As explained in Understanding GPU Architecture, one thread on a GPU is not equivalent to one thread on a CPU. Instead, it is analogous to one lane of a CPU's vector unit, which is where SIMD (Single Instruction, Multiple Data) processing takes place.
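To make the lane analogy concrete, here is a toy Python model (not real GPU code) of a warp executing a branch. It assumes a warp width of 32, as on NVIDIA GPUs, and charges one "step" for each branch body a warp runs; the kernel `x*2 if x is even else x+1` is a hypothetical example. Because all lanes follow a single instruction stream, a warp whose lanes disagree on the condition must run both bodies, masking off the lanes that did not take each one, much as a CPU vector unit computes both sides of a vectorized branch and blends the results.

```python
# Toy SIMT model: a warp is a group of lanes sharing one instruction stream.
# Assumptions: warp width 32; each branch body costs one step per warp.
WARP_SIZE = 32


def run_warp(values):
    """Apply the hypothetical kernel `x*2 if x even else x+1` to one warp.
    Every lane evaluates the condition; each branch body then runs under
    a mask. Returns (results, branch_steps)."""
    mask = [v % 2 == 0 for v in values]  # per-lane condition
    results = list(values)
    steps = 0
    if any(mask):  # "if" body: lanes with a False mask are idle
        results = [v * 2 if m else r for v, m, r in zip(values, mask, results)]
        steps += 1
    if not all(mask):  # "else" body: lanes with a True mask are idle
        results = [r if m else v + 1 for v, m, r in zip(values, mask, results)]
        steps += 1
    return results, steps


def run_kernel(data):
    """Split data into warps, run each one, and total the branch steps."""
    out, total_steps = [], 0
    for start in range(0, len(data), WARP_SIZE):
        res, steps = run_warp(data[start:start + WARP_SIZE])
        out.extend(res)
        total_steps += steps
    return out, total_steps


if __name__ == "__main__":
    n = 1024
    same_path = [i // WARP_SIZE for i in range(n)]  # parity constant within a warp
    mixed_path = list(range(n))                     # parity alternates lane to lane
    for name, data in (("uniform", same_path), ("divergent", mixed_path)):
        _, steps = run_kernel(data)
        print(f"{name:9s}: {steps} branch steps for {n // WARP_SIZE} warps")
```

With n = 1024 (32 warps), the input whose parity is constant within each warp needs 32 branch steps, while the input whose parity alternates from lane to lane needs 64, even though both do the same useful work per element.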

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)