What is MPI?

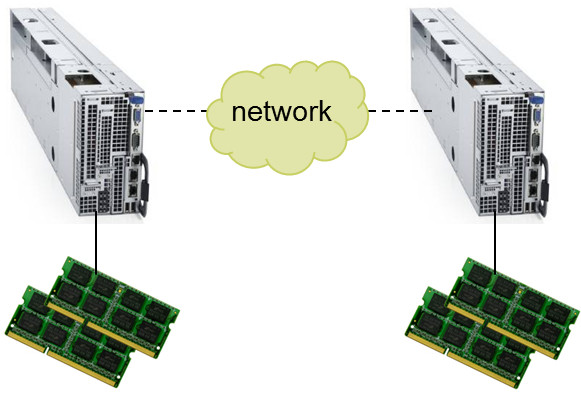

MPI, which stands for Message Passing Interface, is a specification for a standardized, portable interface to a library that supports communication and related operations useful for distributed memory programming. In distributed memory programming, processes cannot directly see each other's data, so a library is needed to carry out communication through explicit send and receive calls. The MPI standard, first published in 1994, unified a number of earlier designs for distributed memory communication.

MPI is not itself a library implementation; many implementations are available, and they will be discussed later in this tutorial. In the MPI model, the processes of a parallel program, which may run on different nodes of a distributed memory system, communicate by passing messages to one another through MPI's standardized function and subroutine calls. The standard defines bindings for C and Fortran; C++ programs use the C bindings, because the separate C++ bindings were removed in MPI-3.0.

Because MPI prescribes only the behavior of a conforming message-passing library, not its internal details, there is no single MPI library that everyone uses. Instead, different MPI implementations can achieve superior performance on different systems, for example by taking advantage of specialized network topologies or communication protocols. As a result, MPI libraries were among the first message-passing libraries to offer good performance and functionality without sacrificing a common interface that is standard and portable across systems.

What it Offers

MPI offers the following in a number of high-quality implementations:

Standardization

MPI is standardized on many levels. For example, you can rely on your MPI code to compile and link against any MPI implementation available on your architecture, and your MPI calls should behave the same regardless of which library is linked. Performance, however, is not standardized and can differ from one implementation to another.

Portability

MPI allows you to write portable programs that still take advantage of the specific hardware and software provided by vendors, because implementers can tune the MPI calls to the underlying hardware and software environment.

Performance

MPI is the primary environment for parallel programming on the majority of distributed memory systems, in part because it has shown higher performance than a number of other environments. A few alternatives exist (such as Charm++, HPX, Global Arrays, and UPC), but none of them is in wide use.

Richness

MPI provides asynchronous (nonblocking) communication, efficient message buffer management, and efficient handling of process groups. It also includes a large set of collective communication operations, virtual topologies, and different communication modes, and it supports third-party libraries and heterogeneous networks.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)