Six Basic MPI Routines

Another way to think about the six basic MPI routines is as functional pairs. Each code block below shows the same program with a different functional pair highlighted. Don't worry about the overall code right now; we'll look at it on the next page.

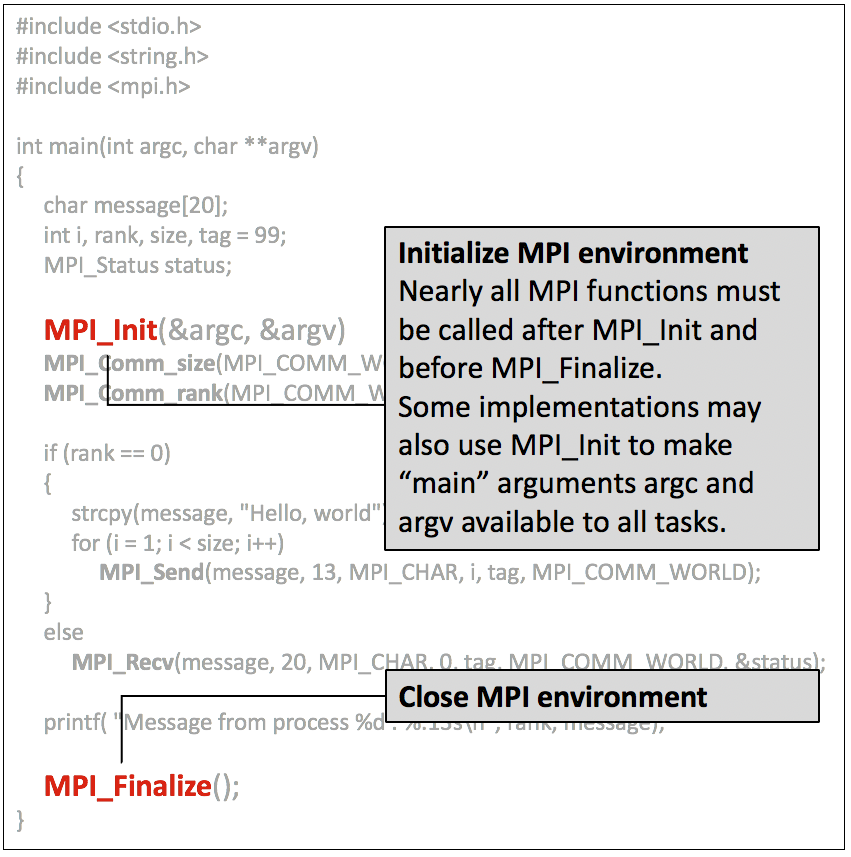

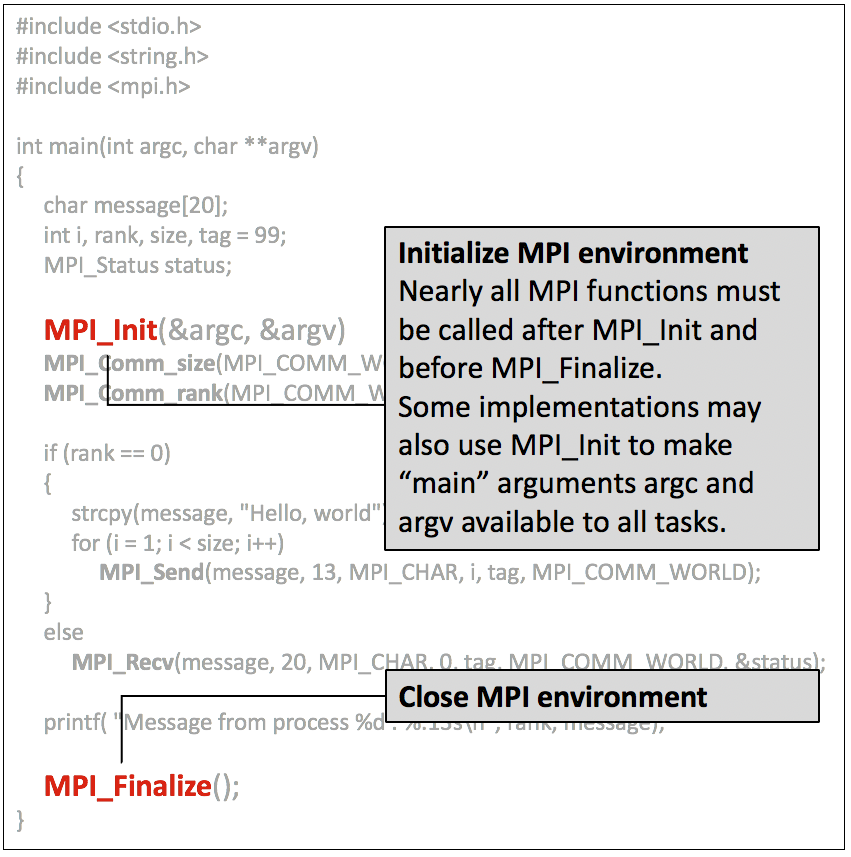

Initialize and Close the MPI Environment

Nearly all MPI functions must be called after MPI_Init and before MPI_Finalize. Some implementations may also use MPI_Init to make the argc and argv arguments from the main process available to all other processes.

MPI_Init and MPI_Finalize open and close the MPI environment.

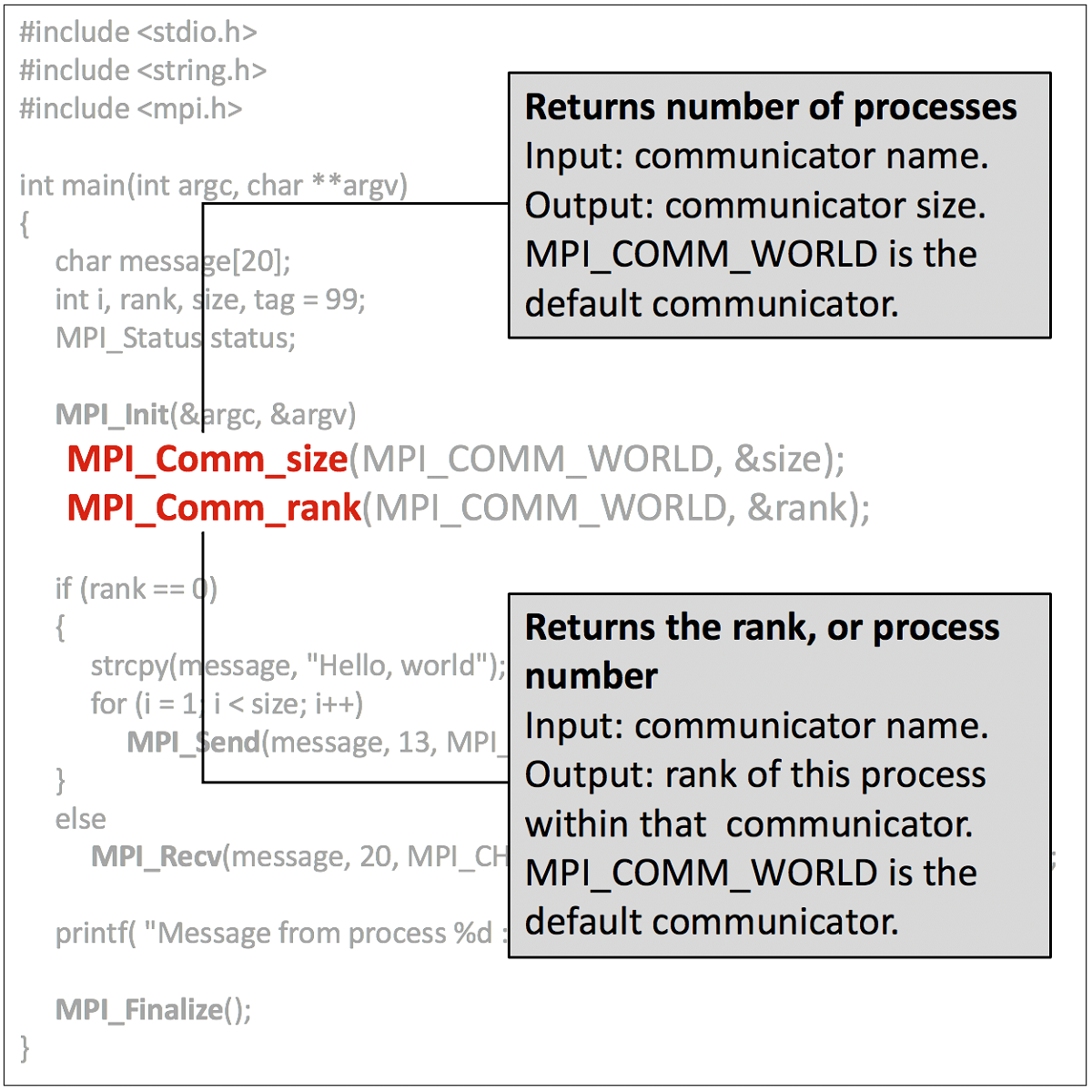

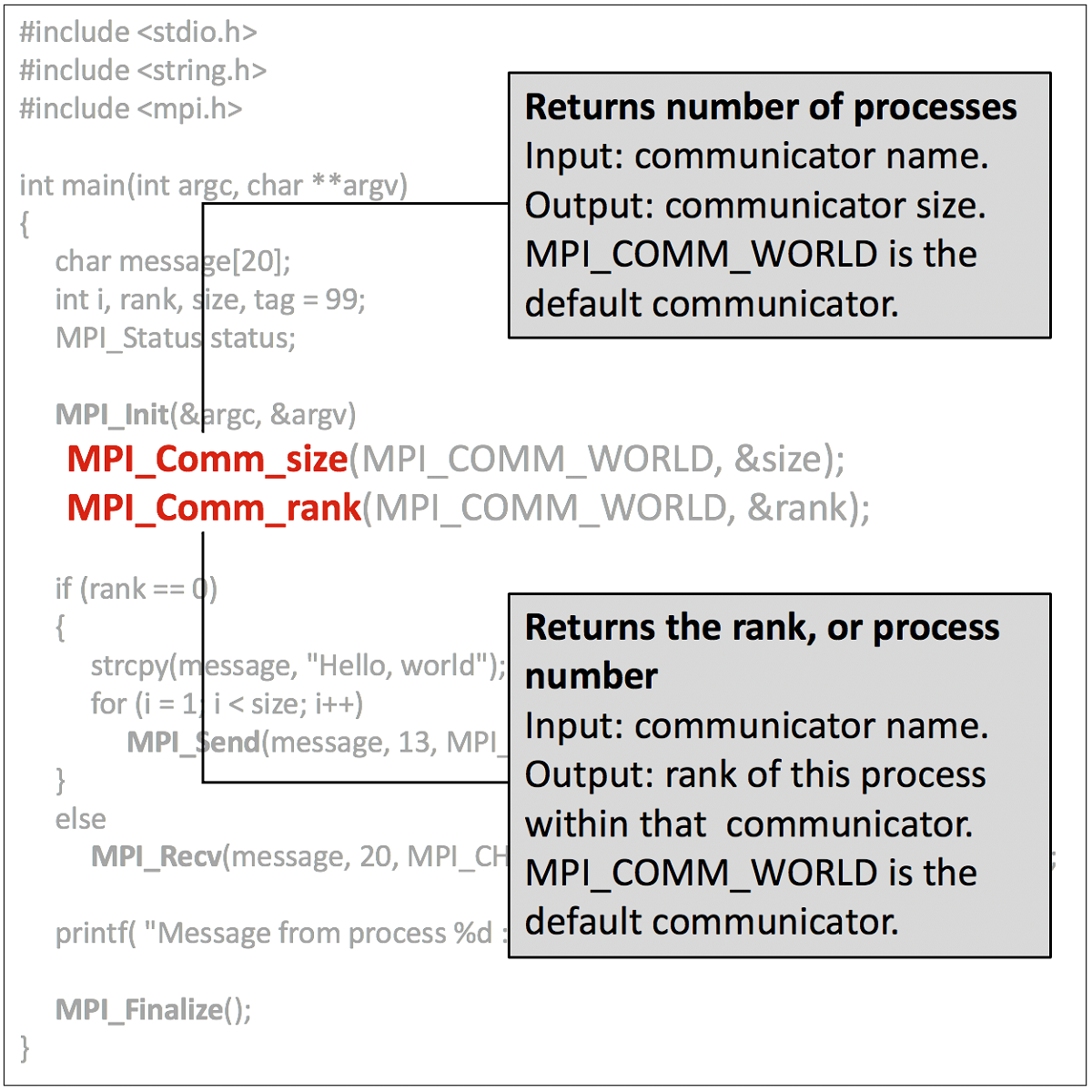

Query the size of the communicator task group and find this process's rank number

Use MPI_Comm_size to get the number of processes in the task group. MPI_Comm_size takes a communicator as input and returns that communicator's size through an output argument. The default communicator, MPI_COMM_WORLD, contains all processes that belong to the program.

Use MPI_Comm_rank to find the rank, or process number, of the current process. Although every process in an MPI job runs the same program, each process has a unique rank within the communicator. This lets you control which code each process executes. MPI_Comm_rank takes a communicator as input and returns the rank of the calling process within that communicator.

With MPI_Comm_size and MPI_Comm_rank, each process learns the size of the communicator and its own rank, so it can selectively run the code that corresponds to its rank.

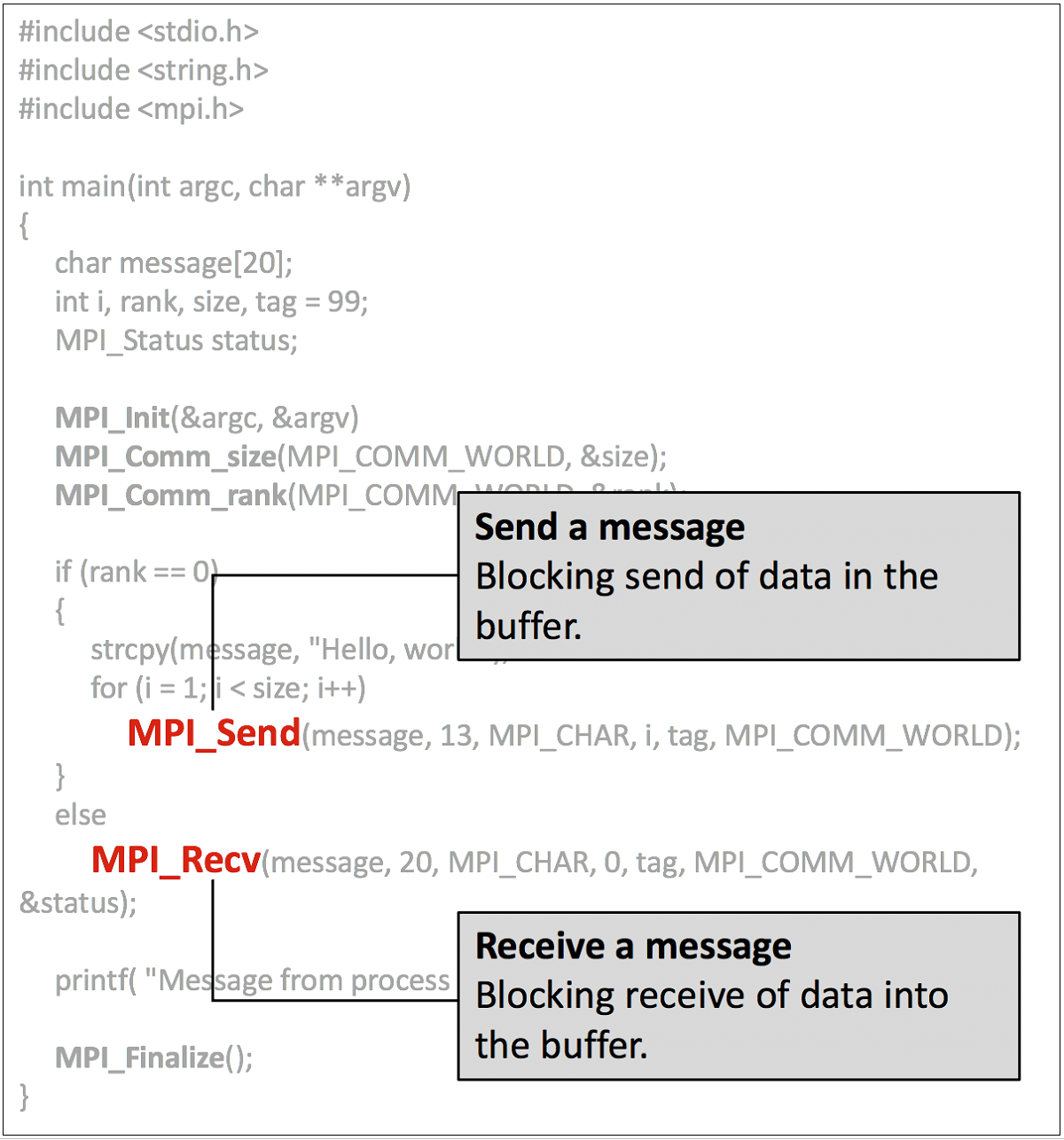

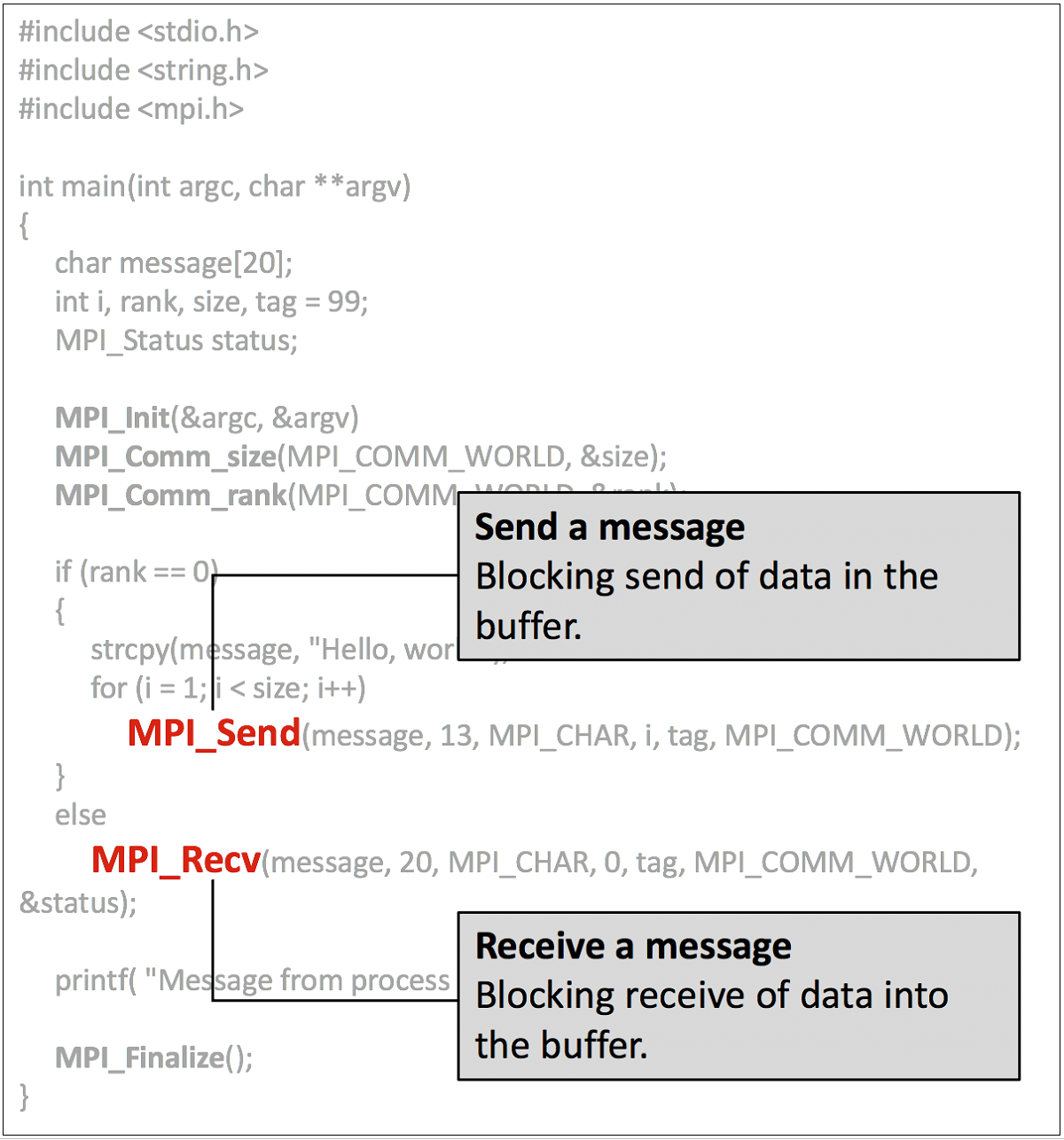

Send and Receive Messages

In MPI, processes communicate via messages. In this example, the process with rank 0 uses MPI_Send to send a message to each of the other processes. Each of the other processes calls MPI_Recv to receive that message.

MPI_Send sends a message from the main process (rank 0) to each of the other processes. The rest of the processes use MPI_Recv to retrieve that message.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)