Motivation

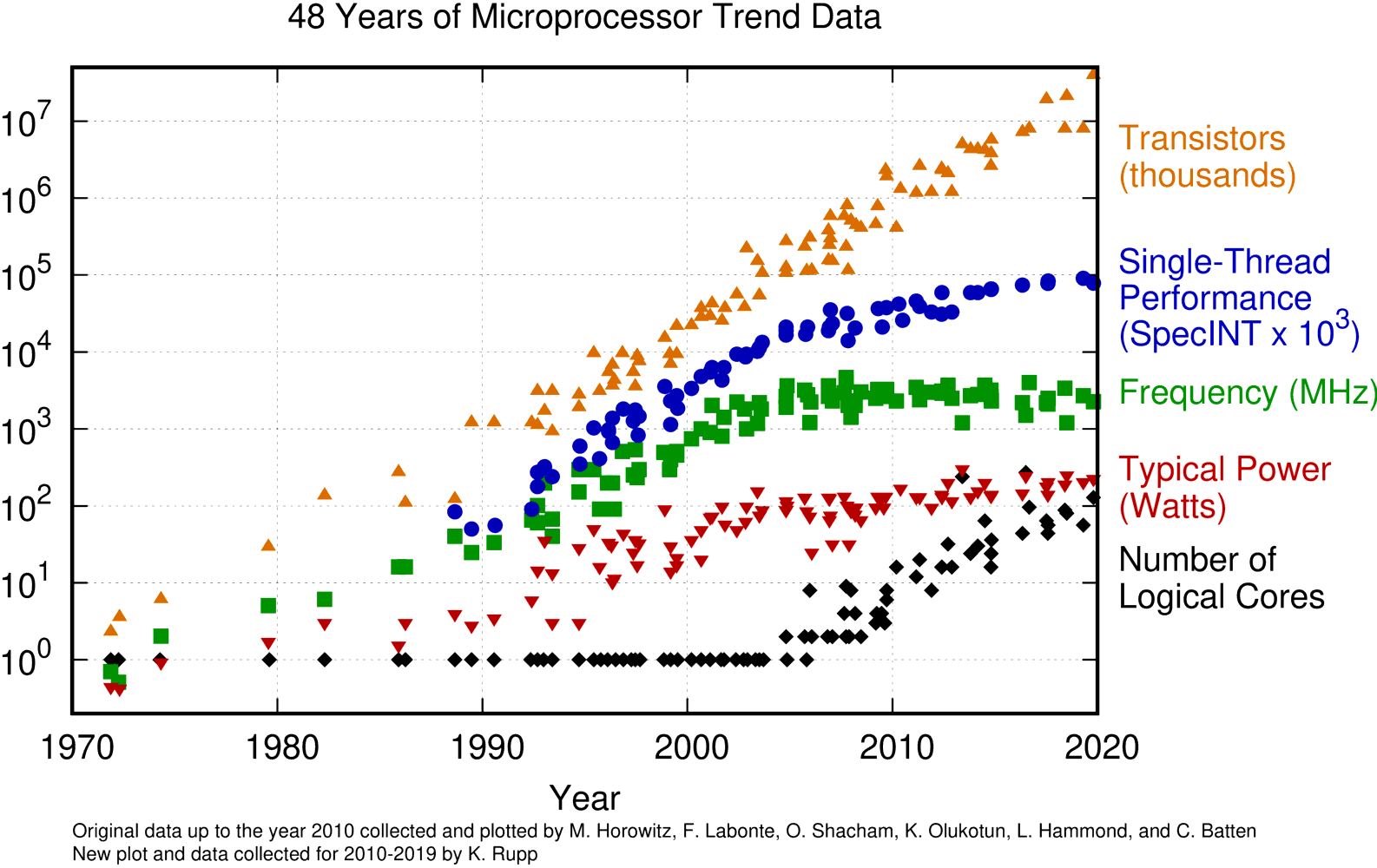

Nearly everyone who follows computer technology is familiar with Moore's Law, the observation that the density of transistors on computer chips tends to double every 18-24 months. The end of Moore's Law has been predicted for many years, yet surprisingly, the trend has continued. However, over the past decade or two, the way in which Moore's Law has shown up in processors has changed: CPU frequency is no longer increasing at a similar pace. In fact, the clock speeds of today's CPUs, measured in GHz, are no higher than they were 15 years ago. Instead, as the diagram shows, the extra density of transistors has mostly resulted in more and more cores being added to processors.

Why did this change take place? The answer turns out to be that even though the exponential scaling of Moore's Law persists, a different trend called Dennard scaling has broken down. In a seminal 1974 paper, Dennard et al. showed that if MOSFET transistors in a semiconductor device shrink by a factor $k$ in each dimension, then the voltage $V$ and current $I$ required by each circuit element can also be made to decrease by a factor of $k$. This reduces each element's power consumption ($VI$) by a factor of $k^2$. But the number of these elements per unit area increases by a factor of $k^2$, so the power dissipated per unit area stays the same. This means the power and cooling constraints of the overall device remain unaltered. Yet these scaled-down elements can operate at higher frequency, because the delay time $\tau \sim RC$ per circuit element is reduced by a factor of $k$. (Basic physics: per element, the resistance $R = V/I$ is unchanged, while the capacitance $C$ depends on area/distance and shrinks by a factor of $k$.) Unfortunately, starting around 2005, this favorable Dennard scaling began to run into trouble due to leakage currents that develop at super-small dimensions. As a result, even though transistors are still shrinking in size, the frequency cannot be made to go higher.
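The Dennard scaling argument can be laid out step by step. The block below is only a restatement of the reasoning in the paragraph above, not a reproduction of the derivation in the 1974 paper. The symbols $n$ (elements per unit area), $A$ (capacitor area), $d$ (capacitor spacing), and $f$ (operating frequency) are introduced here for illustration, and primes denote quantities after every linear dimension has shrunk by a factor $k > 1$.

```latex
\begin{align*}
V' &= \frac{V}{k}, \qquad I' = \frac{I}{k}
  && \text{(voltage and current per element)} \\
P' &= V' I' = \frac{VI}{k^2}
  && \text{(power per element)} \\
n' &= k^2 n
  && \text{(elements per unit area)} \\
n' P' &= (k^2 n)\,\frac{VI}{k^2} = n\,VI
  && \text{(power per unit area is unchanged)} \\
R' &= \frac{V'}{I'} = \frac{V/k}{I/k} = R
  && \text{(resistance is unchanged)} \\
C' &\propto \frac{A'}{d'} = \frac{A/k^2}{d/k} = \frac{1}{k}\,\frac{A}{d}
  && \text{(capacitance shrinks by } k\text{)} \\
\tau' &= R' C' = \frac{RC}{k} = \frac{\tau}{k}
  && \text{(delay time shrinks by } k\text{)} \\
f' &\propto \frac{1}{\tau'} = \frac{k}{\tau}
  && \text{(attainable frequency grows by } k\text{)}
\end{align*}
```

In other words, as long as voltage and current can keep scaling down with the feature size, each generation delivers higher frequency at constant power density. Leakage currents break the first line of this chain, and the frequency gain in the last line goes with it.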

Accordingly, in 2005, the first dual-core Intel chips arrived—and from there, the core counts have just kept growing. To cite a couple of recent examples, the Intel Xeon "Ice Lake" processors in TACC's Stampede3 possess 40 cores (the max for that model), while AMD EPYC "Milan" processors from around the same period have up to 64.

A closer look at the above diagram shows that the increase in core counts doesn't tell the whole story. Another trend has been building as well: single-thread performance has been on the rise, albeit more slowly. There are various reasons for this, but the main one is the growth of SIMD or vector operations. SIMD instructions first appeared on Intel Pentium with MMX in 1996, and since then, vectors have ballooned in size. The latest SIMD extensions in Intel Xeon Scalable Processors (AVX-512) can operate on 512 bits at a time, which is 16 single-precision floats.
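The "16 floats" figure is simply the vector width divided by the element size. As a minimal sketch of that arithmetic, the snippet below tabulates how many elements fit in one vector register for three x86 SIMD generations. The function name `simd_lanes` and the table `VECTOR_WIDTHS` are made up for this illustration, and the lane count is only an upper bound on the speedup from vectorization, not a measured result.

```python
def simd_lanes(vector_bits: int, element_bits: int) -> int:
    """Return how many elements of a given size fit in one vector register."""
    if vector_bits % element_bits != 0:
        raise ValueError("element size must divide the vector width evenly")
    return vector_bits // element_bits


# Register widths in bits for three x86 SIMD generations (illustrative table).
VECTOR_WIDTHS = {"SSE": 128, "AVX": 256, "AVX-512": 512}

for name, bits in VECTOR_WIDTHS.items():
    floats = simd_lanes(bits, 32)   # single precision: 32 bits per element
    doubles = simd_lanes(bits, 64)  # double precision: 64 bits per element
    print(f"{name}: {floats} floats or {doubles} doubles per instruction")
```

With 512-bit vectors, a single instruction can in principle process 16 single-precision or 8 double-precision values, which is why an order-of-magnitude speedup is at least conceivable for code that vectorizes well.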

The ultimate question we are trying to answer in our Vectorization roadmap is simply this: can vectorization really increase computational speeds by an order of magnitude? Exactly how important is this type of parallelism, in modern processors? (At the very least, it seems it can't be neglected!)

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)