System Architecture

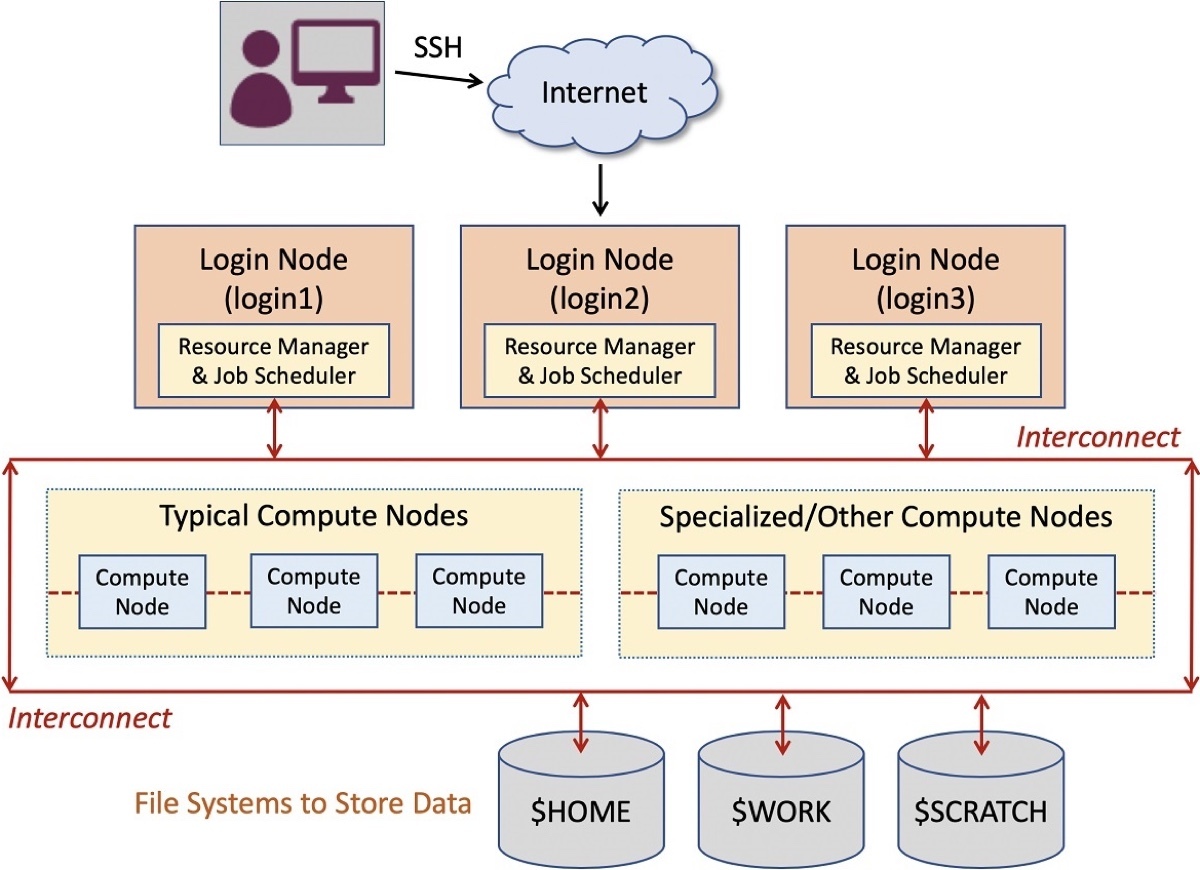

On Frontera, the login nodes provide access to the system for tasks such as compiling, job submission, and data transfer. Although the login nodes are configured similarly to the compute nodes, they are not intended for computational jobs. Instead, they serve as your gateway to the rest of the system, as shown in the diagram above.

The extensive array of compute nodes is intended for running large-scale jobs in batch or interactive mode. Each node has two 28-core Intel Xeon Platinum 8280 "Cascade Lake" chips (often abbreviated as "CLX" by TACC), for 56 total cores in 2 sockets. We'll look at the full details of these nodes shortly (in Compute Nodes).

The other nodes offer specialized hardware for specific types of jobs. The large memory nodes, as the name implies, are intended for jobs requiring higher memory usage. Each of these nodes has four 28-core Intel Xeon Platinum 8280M ("Cascade Lake") chips, for 112 total cores in 4 sockets. The nodes include 2.1 TB of Optane memory and 3.2 TB of extra local storage, and are otherwise similar to the compute nodes. The single-precision GPU nodes on Frontera each have four NVIDIA Quadro RTX 5000 cards and two Intel Xeon E5-2620 v4 ("Broadwell") CPUs.
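The per-node core counts quoted above follow from multiplying sockets by cores per socket. The short Python sketch below shows that arithmetic for the two node types whose core counts are given here. The names `NODE_TYPES`, `cores_per_node`, and `cores_for_job` are hypothetical and chosen only for illustration; they are not part of any TACC tool. The GPU nodes are left out because this section does not state how many cores their CPUs have.

```python
# Illustrative sketch only: the figures come from the text above, and the
# names below are hypothetical, not part of any TACC software.

# node type -> (sockets per node, cores per socket)
NODE_TYPES = {
    "compute": (2, 28),       # Intel Xeon Platinum 8280
    "large memory": (4, 28),  # Intel Xeon Platinum 8280M
}


def cores_per_node(node_type: str) -> int:
    """Return the total CPU cores on one node of the given type."""
    sockets, cores_per_socket = NODE_TYPES[node_type]
    return sockets * cores_per_socket


def cores_for_job(node_type: str, node_count: int) -> int:
    """Return the total CPU cores available across node_count nodes."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return cores_per_node(node_type) * node_count


if __name__ == "__main__":
    for name in NODE_TYPES:
        print(f"{name}: {cores_per_node(name)} cores per node")
    # A job spanning 4 compute nodes has 4 * 56 cores to work with.
    print("4 compute nodes:", cores_for_job("compute", 4), "cores")
```

A calculation like this can help when deciding how many nodes to request: for example, a job that runs one task per core on 4 compute nodes would use 224 tasks.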

The Mellanox InfiniBand interconnect ties all these components to each other and to the various high-performance storage systems. Frontera's main filesystems are covered in the topic Storing and Moving Data.