Incorporating databases into your workflow I

Relational databases are generally part of workflows. The various uses of the databases can impose different demands and performance may have to be tuned for specific use cases. Here we discuss a few common tips and tricks.

Cleaning Data

Relational databases will generally reject attempts to INSERT data in the wrong form, with critical data missing, with incorrect types or which it otherwise can't parse. This is good, because the database promises consistency and attempts to put in data which doesn't match the database schema should fail, but it can be frustrating when it happens. The data should be cleaned before loading is attempted, which often means that checks should be run on the source of the data. The basic issues to address are:

- Ensure data are present for each required column

- The data must be the right types for each column

- There must be a way to indicate missing values for non-required columns

- If you have a column which auto-generates an entry, such as an auto-incrementing column that is a surrogate key, there must not be data in it

- Any other constraints must be obeyed. This will most often be a matter of ensuring values in a foreign key column are present and valid

Getting foreign key values before or during loading

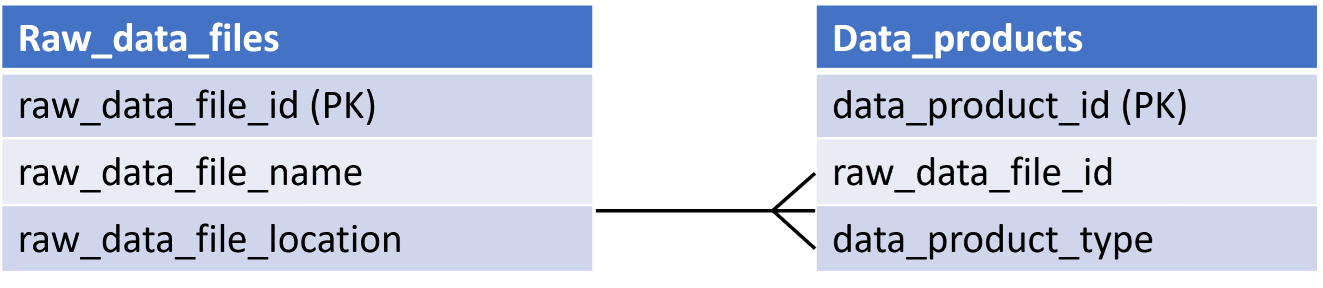

Foreign keys need to be inserted along with the rest of the data, but they may not be known in advance, particularly when the primary key of the foreign key table is a surrogate key, which has no semantic information.; instead, some other information from the key table may be known and must be used to get the foreign key. It's possible to get them with another query, which can be run first and then the data included in the main INSERT, or it can actually be included as a subquery in the INSERT. Consider the tables below:

If we know the filename <filename> and file location <file location> of the source raw data file—which isn't that unusual, it's often recorded in the process of making the data products, which here we assume have data_product_type <data product type>—then we can use that to get the raw_data_file_id with a subquery as we insert the data products. Assuming that the Data_Products table has an auto-incrementing primary key so that we don't have to INSERT it, this query should work:

Bulk loading of data

For INSERTing smallish numbers of rows, the standard INSERT command works fine, but for very large numbers of rows it can be very slow. Unfortunately, ANSI SQL doesn't contain a fast method for bulk loading data quickly, but each RDBMSs does, including loading from files of data. Each tool is different and must be researched separately, but for large loads—particularly periodic ones—it's often important to use such a tool.

Concurrence and dirty reads

Databases are often shared resources and while most of them handle multiple concurrent reads, it won't always be possible to control use so that no one is trying to read the database while INSERTS, UPDATES or DELETES—operations which change the database and may be rolled back if there is an issue during execution—are being done. By default, these operations will often lock those tables, preventing reads, so that users don't read data which subsequently get removed if the transaction fails and gets reversed; such a situation would be called a "dirty read". However, sometimes the consequences of a dirty read are not very serious. The mechanisms to allow this are different for each RDBMS, but if INSERT, UPDATE or DELETE operations are causing problems with the extent that they are blocking reads, you should consider allowing them if the effects won't be damaging.

Stored procedures

Stored procedures, often called "sprocs", are precompiled queries with placeholder variables for parameters, which can then be run as full queries when the input is provided. As they are precompiled, they can run a little faster so long as the database hasn't changed significantly since they were compiled, or they may have gone "stale" and should be recompiled. They also offer some security benefits, both in helping avoid SQL injection (see below) as well as allowing allocation of specific permissions to only execute the sproc, which is limited, rather than have the same level of access to the underlying tables.

SQL injection

If taking input from users to run queries, it might be tempting to concatenate the input into a string which is then run as a query. This is very dangerous, as it allows an attack called SQL injection, where the user input contains valid SQL, which then gets run against the database! Although in principle it may be possible to sanitise the input, checking that it can't be run as SQL, in practice it is better just not to construct queries this way. Options which may help include the use of stored procedures, or else using a programmatic framework which has database connection/query objects with bound parameters, so that the input itself is not executable.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)