Training

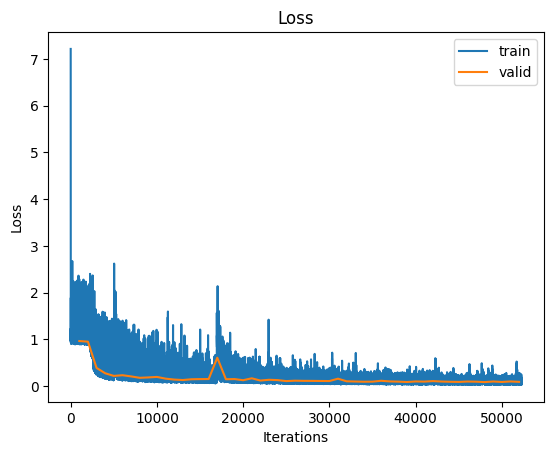

Before we start training the model, let's configure the hyperparameters! Because the available compute is limited, we will run only 1 epoch of training, which takes about 1.5 hours. Consequently, our results will not be as accurate as those reported in the original paper covered in this topic. As an alternative, we provide a checkpoint from training the model on the entire WaterDrop dataset for 5 epochs, which took about 14 hours on a GeForce RTX 3080 Ti.
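As a rough illustration of what such a configuration can look like, here is a minimal sketch that groups the hyperparameters in one place. Only the single training epoch comes from the text above; the class name `TrainingConfig` and every other field and value are hypothetical placeholders, not the notebook's actual settings.

```python
from dataclasses import dataclass, asdict


@dataclass
class TrainingConfig:
    """Hypothetical hyperparameter container (names and most values are assumed)."""

    epochs: int = 1               # from the text: one epoch fits the available compute
    batch_size: int = 4           # assumed placeholder value
    learning_rate: float = 1e-4   # assumed placeholder value
    eval_interval: int = 1000     # assumed: training steps between evaluations
    save_interval: int = 1000     # assumed: training steps between checkpoint saves


# Default configuration for the short, single-epoch run.
config = TrainingConfig()

# A longer run only needs the relevant field overridden.
long_run = TrainingConfig(epochs=5)

print(asdict(config))
```

Keeping the values in a single object makes it easy to log them next to the results and to switch between a short run and a longer one.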

Below are some helper functions for evaluation.
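To give a sense of what an evaluation helper does, here is a minimal sketch assuming a PyTorch model and mean squared error as the metric. The function name `evaluate_mse`, the `(inputs, targets)` batch layout, and the toy linear model are all assumptions made for illustration; the notebook's own helpers may differ.

```python
import torch
from torch import nn


def evaluate_mse(model, batches):
    """Return the mean squared error of `model` over (inputs, targets) batches."""
    was_training = model.training
    model.eval()                      # switch off training-only behavior such as dropout
    total, count = 0.0, 0
    with torch.no_grad():             # no gradients are needed for evaluation
        for inputs, targets in batches:
            preds = model(inputs)
            total += nn.functional.mse_loss(preds, targets, reduction="sum").item()
            count += targets.numel()
    if was_training:
        model.train()                 # restore the mode the caller was using
    return total / max(count, 1)      # guard against an empty list of batches


# Toy stand-ins (assumed): random data and a small linear model.
torch.manual_seed(0)
toy_model = nn.Linear(3, 2)
toy_batches = [(torch.randn(8, 3), torch.randn(8, 2)) for _ in range(4)]
mse = evaluate_mse(toy_model, toy_batches)
print(f"toy MSE: {mse:.4f}")
```

Summing the squared errors and dividing once at the end gives the same result regardless of how the data is split into batches.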

Here is the main training loop!
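For readers who want to see the overall shape of such a loop, here is a minimal sketch assuming PyTorch, the Adam optimizer, and an MSE loss. The function `train`, its parameters, and the toy regression data are hypothetical stand-ins and not the notebook's actual model or dataset.

```python
import torch
from torch import nn


def train(model, batches, epochs=1, lr=1e-2):
    """Run a basic training loop and return the mean loss of each epoch."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    history = []
    model.train()
    for epoch in range(epochs):
        running = 0.0
        for inputs, targets in batches:
            optimizer.zero_grad()                   # clear gradients from the previous step
            loss = loss_fn(model(inputs), targets)  # forward pass and loss
            loss.backward()                         # backpropagate
            optimizer.step()                        # update the parameters
            running += loss.item()
        history.append(running / len(batches))
        print(f"epoch {epoch + 1}: mean loss {history[-1]:.4f}")
    return history


# Toy stand-ins (assumed): learn a fixed linear map from random inputs.
torch.manual_seed(0)
true_w = torch.tensor([[1.0], [-2.0], [0.5]])
toy_batches = []
for _ in range(16):
    x = torch.randn(32, 3)
    toy_batches.append((x, x @ true_w))

toy_model = nn.Linear(3, 1)
losses = train(toy_model, toy_batches, epochs=5)
```

A real loop would typically also evaluate the model and save a checkpoint at regular intervals; those steps are left out here to keep the control flow easy to follow.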

Finally, let's load the dataset and train the model! With the default parameters, this block takes roughly 1.5 hours to run in a TAP Jupyter notebook.

Load the checkpoint that we trained. Skip this block if you have already trained your own model in the previous block.
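As an illustration of how checkpoint loading usually works, here is a minimal sketch assuming PyTorch and a checkpoint file that stores only the model's `state_dict`. The helper names, the file name `toy_checkpoint.pt`, and the toy model are hypothetical; the provided checkpoint may be stored in a different layout.

```python
import os
import tempfile

import torch
from torch import nn


def save_checkpoint(model, path):
    """Write the model parameters to `path`."""
    torch.save(model.state_dict(), path)


def load_checkpoint(model, path):
    """Load parameters from `path` into `model`, replacing its current weights."""
    state = torch.load(path, map_location="cpu")  # load on CPU regardless of where it was saved
    model.load_state_dict(state)
    return model


# Toy round trip (assumed setup): save one model, restore into a fresh one.
torch.manual_seed(0)
trained = nn.Linear(3, 2)
with tempfile.TemporaryDirectory() as tmp:
    ckpt_path = os.path.join(tmp, "toy_checkpoint.pt")
    save_checkpoint(trained, ckpt_path)
    restored = load_checkpoint(nn.Linear(3, 2), ckpt_path)

print(torch.equal(trained.weight, restored.weight))
```

In this sketch, `load_state_dict` overwrites whatever weights the target model currently holds.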

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)