Interconnects

There are actually several kinds of interconnects in an HPC system like Frontera or Stampede3. One tends to think immediately of the large-scale network fabric that connects all the nodes together. However, interconnects exist within each node, too, in several different ways.

- The individual cores in each processor are connected by a 2D mesh, so that each core's slice of L3 cache can be shared with the other cores in the same processor, creating a larger effective L3.

- The two processors in each node are connected by Intel's Ultra Path Interconnect (UPI); this is how the shared last level cache (LLC) described on the previous page is able to bridge between the two processors' separate L3 caches.

- The node's UPI also gives each processor direct access to the memory that is attached to the other processor's socket.

The above mechanisms effectively expand the amount of cache and memory available to any one core within a node. However, the speed of access becomes nonuniform, especially between sockets. Yet that nonuniformity is almost negligible compared to the bigger problem of moving data efficiently between different nodes.

Network Interconnects

On Frontera and Stampede3, as on most advanced clusters, the main role of network interconnects is to enable inter-node communication between MPI processes at a very large scale. On Stampede3, this is accomplished using the Intel Omni-Path Architecture (OPA), which provides connectivity for the SKX, ICX, and SPR-based nodes on that system. OPA was introduced by Intel in 2017 and was previously deployed in the former Stampede2 for its KNL and SKX nodes. Intel discontinued further development of OPA in 2020, and Cornelis Networks took it over, so Stampede3 now receives its OPA upgrades from Cornelis. Frontera's network interconnect, by contrast, is based on Mellanox HDR technology. Some of the specifications for the Omni-Path and Mellanox HDR interconnects on the TACC systems are summarized below.

On both Frontera and Stampede3, all network traffic to, from, and between nodes is mediated by a huge packet-switching network. In addition to supporting inter-node communication, the switch fabric connects all the compute nodes to the $HOME and $SCRATCH filesystems on each cluster. Each cluster's interconnect also provides access to the cross-cluster $STOCKYARD filesystem with its cluster-specific $WORK subdirectories.

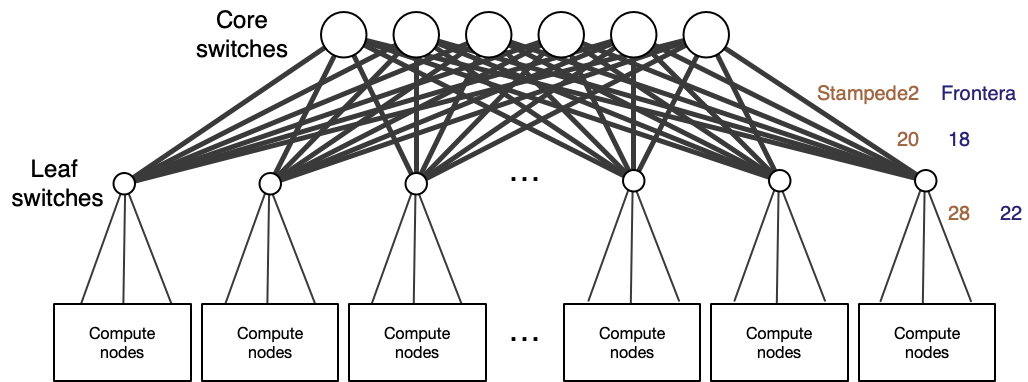

Both Frontera and Stampede3 use a network based on a fat tree topology, with a set of core switches connected to a set of leaf switches, each of which is in turn connected to a group of compute nodes. A schematic view of such a topology is shown in the accompanying figure.
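To make the two-level structure concrete, here is a minimal sketch of how many switches a message crosses in an idealized fat tree. It assumes, purely for illustration, that node IDs are assigned consecutively to leaf switches in blocks of `nodes_per_leaf`; the helper names `leaf_of` and `switches_traversed` are hypothetical. Traffic between two nodes on the same leaf stays within that leaf switch, while traffic between leaves must climb to a core switch and come back down.

```python
# Hypothetical model of an idealized two-level fat tree: compute nodes attach
# to leaf switches, and every leaf switch connects to the core switches.
# Assumption: node IDs are assigned consecutively, nodes_per_leaf per leaf.

def leaf_of(node: int, nodes_per_leaf: int) -> int:
    """Return the index of the leaf switch a node is attached to."""
    return node // nodes_per_leaf

def switches_traversed(src: int, dst: int, nodes_per_leaf: int) -> int:
    """Count the switches a packet passes through between two nodes.

    Same leaf:        node -> leaf -> node                  (1 switch)
    Different leaves: node -> leaf -> core -> leaf -> node  (3 switches)
    """
    if src == dst:
        return 0
    if leaf_of(src, nodes_per_leaf) == leaf_of(dst, nodes_per_leaf):
        return 1
    return 3

if __name__ == "__main__":
    # 44 nodes per leaf, matching one half of a Frontera rack
    print(switches_traversed(0, 43, 44))  # 1
    print(switches_traversed(0, 44, 44))  # 3
```

Only the off-leaf case depends on the leaf-to-core uplinks, which is why the number of uplinks per leaf (discussed next) matters for performance.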

Stampede3: The interconnect is a 100 Gb/s Intel Omni-Path (OPA) network with a fat tree topology employing six core switches. For the SKX nodes alone (denoted "Stampede2" in the diagram), there is one leaf switch for each 28-node half rack, each with 20 leaf-to-core uplinks, resulting in an oversubscription of 28/20 (1.4:1). The ICX and SPR-based nodes are connected by a full fat tree that is not oversubscribed.
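The 28/20 oversubscription is a ratio of aggregate bandwidths: what the nodes can push into a leaf switch versus what the leaf can forward to the core. The sketch below works through that arithmetic for an SKX half rack, assuming every link runs at the 100 Gb/s OPA rate and that the uplinks are shared evenly under full load. Both helper functions are hypothetical names used only for this illustration.

```python
# Hypothetical helpers for leaf-switch bandwidth arithmetic.

def oversubscription(n_down: int, down_gbps: float, n_up: int, up_gbps: float) -> float:
    """Aggregate node-facing bandwidth divided by aggregate core-facing bandwidth."""
    return (n_down * down_gbps) / (n_up * up_gbps)

def worst_case_share(n_nodes: int, n_up: int, up_gbps: float) -> float:
    """Uplink bandwidth per node if every node on the leaf sends off-leaf at once,
    assuming the uplinks are shared evenly (an idealization)."""
    return n_up * up_gbps / n_nodes

# Stampede3 SKX half rack: 28 nodes at 100 Gb/s, 20 uplinks at 100 Gb/s
ratio = oversubscription(28, 100.0, 20, 100.0)
share = worst_case_share(28, 20, 100.0)
print(f"oversubscription {ratio:.2f}:1, worst-case {share:.1f} Gb/s per node")
# prints: oversubscription 1.40:1, worst-case 71.4 Gb/s per node
```

In practice the worst case applies only when all nodes on a leaf communicate off-leaf simultaneously; traffic that stays within a leaf switch never touches the uplinks.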

Frontera: The interconnect is based on Mellanox HDR technology with full HDR (200 Gb/s) connectivity between the switches and HDR100 (100 Gb/s) connectivity to the compute nodes. The fat tree topology employs six core switches. Each rack has two 40-port leaf switches, each serving half of the rack: the 44 nodes in one half connect in pairs to 22 downlink ports of their leaf switch, with each pair of HDR100 (100 Gb/s) links sharing one HDR (200 Gb/s) port. The remaining 18 ports are uplinks to the six core switches. This results in an oversubscription of 22/18 (about 1.22:1).
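The same bandwidth arithmetic applies to a Frontera leaf switch. Because each 200 Gb/s HDR port carries two 100 Gb/s HDR100 node links, counting by node links or by leaf ports gives the same downlink total, and the 22/18 ratio follows directly. This is a sketch under the same idealizations as before (links fully utilized, uplinks shared evenly); the constant and function names are hypothetical.

```python
# Hypothetical helper: aggregate node-facing over aggregate core-facing bandwidth.
def oversubscription(down_total_gbps: float, up_total_gbps: float) -> float:
    return down_total_gbps / up_total_gbps

# Frontera leaf switch, per the text: 40 ports in total.
NODES_PER_LEAF = 44   # half of an 88-node rack
DOWN_PORTS = 22       # HDR ports facing the nodes (two nodes per port)
UP_PORTS = 18         # HDR ports facing the six core switches
HDR_GBPS = 200.0      # HDR port speed
HDR100_GBPS = 100.0   # per-node link speed

node_side = NODES_PER_LEAF * HDR100_GBPS  # 4400 Gb/s counted by node links
port_side = DOWN_PORTS * HDR_GBPS         # 4400 Gb/s counted by leaf ports
up_side = UP_PORTS * HDR_GBPS             # 3600 Gb/s toward the core

ratio = oversubscription(port_side, up_side)
share = up_side / NODES_PER_LEAF  # per-node uplink share if all 44 send off-leaf

print(f"ports used: {DOWN_PORTS + UP_PORTS} of 40")
print(f"oversubscription {ratio:.3f}:1, worst-case {share:.1f} Gb/s per node")
# prints: ports used: 40 of 40
#         oversubscription 1.222:1, worst-case 81.8 Gb/s per node
```

Compared with the Stampede3 SKX figure of 1.4:1, the lower 22/18 ratio means each Frontera node keeps a larger fraction of its link speed when a whole leaf communicates off-leaf at once.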

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)