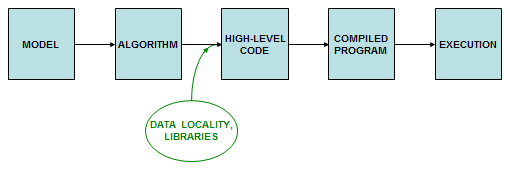

Data Locality Matters

Data locality is often the single most important issue to address for improving per-core performance. As we've seen, any processor is likely to have a memory hierarchy like that in an Intel Xeon Scalable Processor, which features four levels: L1 cache (the fastest), then L2, then L3, then main memory. But during a computation, where in this hierarchy will the processor actually find the data that are needed at any given moment? The answer clearly has a tremendous impact on how quickly the processor generates the next result. With that in mind, we examine some of the data locality considerations that arise when writing code.

The best outcome would clearly be to find our data in L1 cache every time they are needed. This aim would be nearly impossible to achieve were it not for the important fact that data items are not brought into L1 in isolation. Instead, they are fetched as part of a larger unit called a cache line, which in current Intel processors is 64 bytes long. In effect, main memory is divided up in to 64-byte units beginning at particular addresses; each cache line moves through the hierarchy as a unit. We can therefore get 16 floats (or 8 doubles) for the cost of fetching just one. It makes good sense to try to use all 16 (or 8) items if we can, because we know they will be instantly found in L1 cache.

Putting this knowledge together with the other aspects of microarchitecture we've learned so far, we come up with a list like this:

Principles of good data locality

-

Code accesses contiguous, stride-one memory addresses

- Reason: data are always fetched in cache lines which include neighbors

- Reason: inner loops are instantly vectorizable via SSE, AVX, or AVX-512

-

Code emphasizes cache reuse

- Reason: when multiple operations on a data item are grouped together, the item remains in cache, where access is much faster than RAM

-

Data are aligned

on important boundaries (e.g., doublewords)

- Reason: items don't straddle boundaries, so efficient one-shot access is possible

- Reason: it is a precondition for fetching data as a single vector, single cache line, etc.

Goal: do all the work you can on well-aligned data while they are in cache, so that deeper levels of the memory hierarchy are accessed infrequently.

We discuss these principles more in the following pages, but to elaborate on the top one briefly: it is doubly beneficial to access tightly packed (i.e., contiguous and stride-one) data. First, it obviously reduces the number of slow fetches from memory to cache. Second, it better allows the compiler to exploit the processor's SIMD instruction set, which is easiest to apply when the code is stepping through consecutive locations in memory. The compiler will have a much harder time vectorizing loops with a stride greater than one, especially if the big stride occurs in inner loops.

Data locality becomes even more important for accelerators (e.g., GPUs) than it is for host CPUs, simply because every item on the above list becomes individually more critical to performance. A fetch from main memory to a GPU cache is usually slower than it would be for a CPU fetch, and cache sizes are smaller than on CPUs. Furthermore, accelerators like GPUs tend to rely more heavily on SIMD operation for performance, so while each device is different, it is fairly crucial to adhere to the principle of arranging data into boundary-aligned sets of contiguous vectors when using GPUs. For more details related to GPUs, see Understanding GPU Architecture.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)