Multithreaded MPI Calls

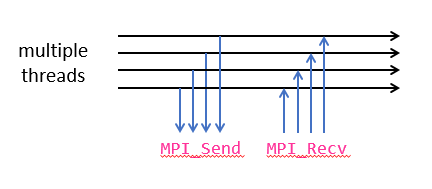

At the highest level of thread support, MPI_THREAD_MULTIPLE, all the threads in the thread-parallel regions of a hybrid code can send messages to one another simultaneously. However, special techniques may be required to make sure that each message reaches the thread it is intended for. The code excerpt below gives one example of how to do this; it is expanded upon in the exercise to follow.

MPI_THREAD_MULTIPLE: all threads may call MPI concurrently, with no restrictions.

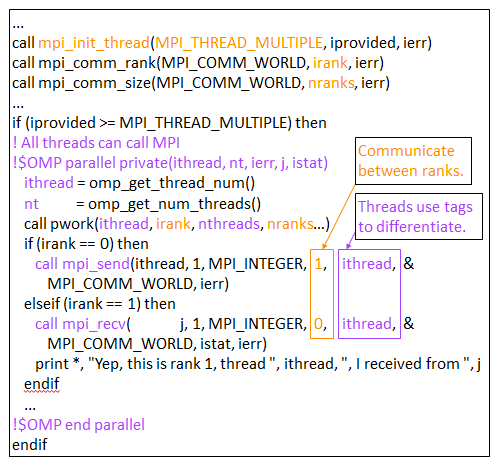

Notes on this program:

- Thread ID as well as rank can be used in communication

- Technique is illustrated in the above multithreaded "ping" (send/receive) example

- A total of nthreads messages are sent/received between two MPI ranks

- From the sending process: each thread sends a message tagged with its thread number

- Recall that an MPI_Recv that specifies a tag can receive only a message that was sent with the same tag

- At the receiving process: each thread looks for a message tagged with its thread number

- Therefore, communication occurs pairwise, between threads whose numbers match

The above example illustrates the use of point-to-point communication among threads. But special attention must be given if threads will be using MPI collective communications, such as calls to MPI_Reduce(). According to the MPI standard: "In these situations, it is the user's responsibility to ensure that the same communicator is not used concurrently by two different collective communication calls at the same process." In practice, if more than one thread in the same process will be making collective calls concurrently, each of those threads needs its own communicator, which can be created by invoking MPI_Comm_dup() to make copies of MPI_COMM_WORLD.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)