RAM Arrangement in HPC Clusters

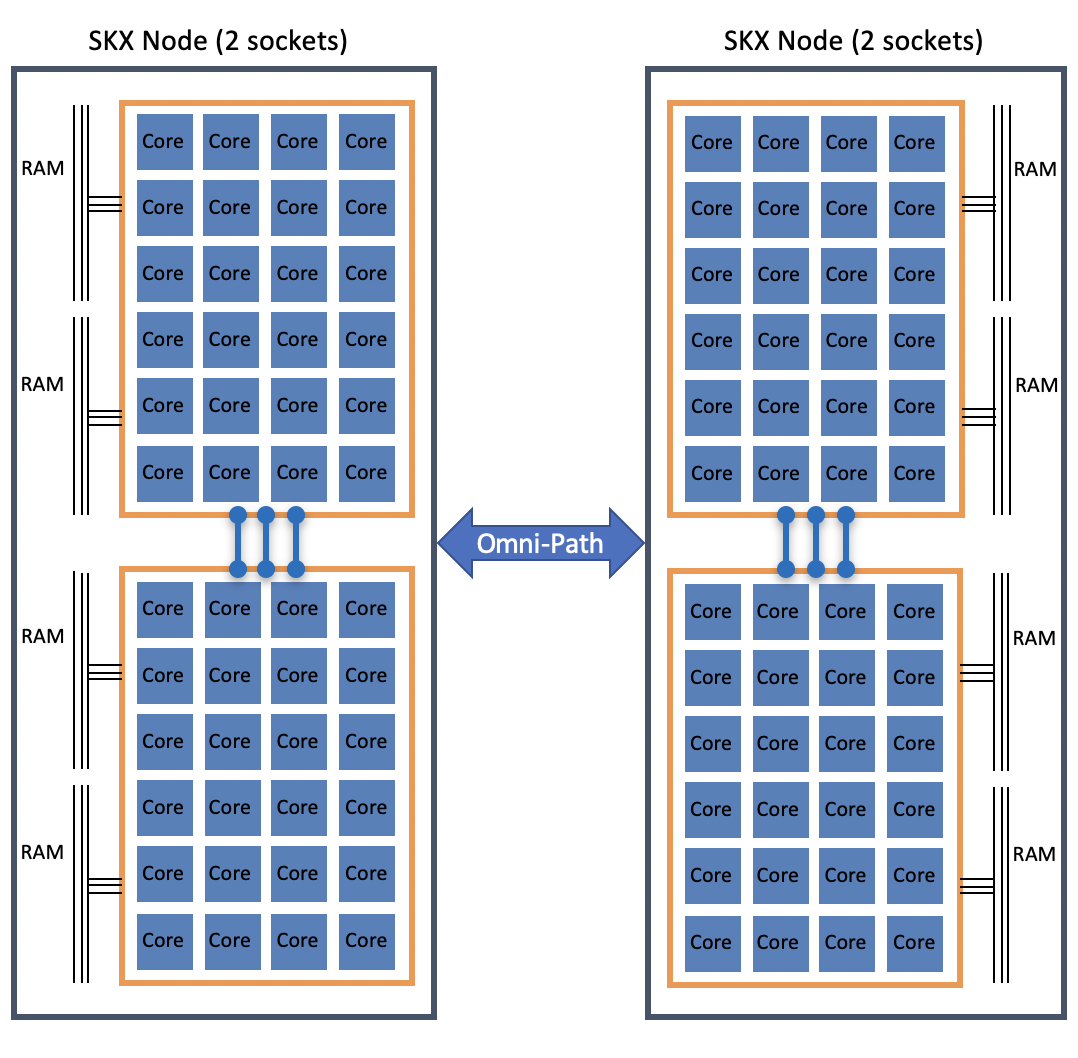

The basic memory layout of Stampede3 at TACC follows a typical pattern for HPC clusters, which may be summarized and illustrated as follows:

- The cluster memory is separated between nodes, so it is distributed memory

  - Memory is local to each node and is not directly addressable from other nodes

  - To share data, message passing over a network is required

  - This is symbolized by the arrow that connects two nodes in the figure above

- Within a single node, memory spans all cores on a node, so it is shared memory

  - A node's full local memory is addressable from any core in the node

  - This is indicated by the black border that surrounds each 48-core Skylake ("SKX") node in the figure
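The difference between the two models can be imitated on a single machine. The following is only a rough analogy, not how data actually moves between cluster nodes (which would normally involve a message-passing library and a network): threads within one process stand in for cores sharing a node's memory, and a separate child process stands in for a remote node that can only receive data sent to it explicitly. The function names and `CHILD_CODE` are hypothetical, chosen for illustration.

```python
import subprocess
import sys
import threading


def shared_memory_sum(values, n_threads=4):
    """Threads in one process read the same list directly (shared memory)."""
    partial = [0] * n_threads  # one slot per thread, visible to every thread

    def worker(tid):
        # Each thread reads its slice of the *same* list object; nothing is sent.
        partial[tid] = sum(values[tid::n_threads])

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(partial)


# Program run by the separate process: read numbers from stdin, print their sum.
CHILD_CODE = "import sys; print(sum(int(x) for x in sys.stdin.read().split()))"


def message_passing_sum(values):
    """A separate process cannot address `values`; the data must be sent to it."""
    message = " ".join(str(v) for v in values)
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        input=message, capture_output=True, text=True, check=True,
    )
    return int(result.stdout)


if __name__ == "__main__":
    data = list(range(1, 101))
    print(shared_memory_sum(data))    # threads read `data` in place
    print(message_passing_sum(data))  # `data` is serialized and sent as a message
```

Both functions return the same sum, but only the second one has to package the data and ship it across a process boundary, which is the extra step that distributed memory imposes.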

Even within the nodes, further architectural details result in non-uniform memory access (NUMA). Again taking Stampede3 as an example:

- Typically two sockets per node

  - Each node has two sockets, which hold (for example) two Intel Xeon "Skylake" processors

  - Sockets are indicated by the orange outlines in the above figure

- Multiple cores per socket

  - Each Skylake socket (processor) has 24 cores

  - These are depicted as small blue boxes in the figure

- Memory is attached to sockets

  - Cores sharing the same socket have the fastest access to that socket's attached memory

  - In the figure, memory modules and channels are illustrated by thin black lines
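On a Linux node, the socket-to-memory grouping described above can usually be inspected through sysfs. The sketch below assumes the standard `/sys/devices/system/node/node*/cpulist` files are present; the helper names `parse_cpulist` and `numa_topology` are hypothetical. How many NUMA nodes a given machine reports depends on its hardware and firmware settings, so the output is not guaranteed to show exactly one NUMA node per socket.

```python
import glob
import os
import re


def parse_cpulist(text):
    """Expand a Linux cpulist string such as "0-23,48-71" into a list of core IDs."""
    cores = []
    for part in text.strip().split(","):
        if not part:
            continue  # empty list, e.g. a NUMA node that has memory but no cores
        if "-" in part:
            lo, hi = part.split("-")
            cores.extend(range(int(lo), int(hi) + 1))
        else:
            cores.append(int(part))
    return cores


def numa_topology(sysfs_root="/sys/devices/system/node"):
    """Return {numa_node_id: [core IDs]}; empty if the sysfs tree is absent."""
    topology = {}
    for path in sorted(glob.glob(os.path.join(sysfs_root, "node[0-9]*"))):
        node_id = int(re.search(r"node(\d+)$", path).group(1))
        with open(os.path.join(path, "cpulist")) as f:
            topology[node_id] = parse_cpulist(f.read())
    return topology


if __name__ == "__main__":
    for node_id, cores in sorted(numa_topology().items()):
        print(f"NUMA node {node_id}: {len(cores)} cores -> {cores}")
```

Cores listed under the same NUMA node are the ones with the fastest path to that node's memory, so this grouping is what one would consult when deciding where to pin threads or processes.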

For simplicity, most of the diagrams on the ensuing pages show a 2-socket Xeon Skylake node as a model, making the figures easier to follow (which wouldn't be the case if we drew all the cores of some other processors!). But for completeness, here is a rundown of all the types of nodes currently found in Stampede3 and Frontera.

Stampede3 is a very large HPC cluster composed of 1,864 compute nodes, of multiple types (which have some notable differences):

- SKX - 1,060 dual-processor Intel Xeon Platinum 8160 "Skylake" nodes, each having 48 total cores and 192 GB DDR4 RAM

- ICX - 224 dual-processor Intel Xeon Platinum 8380 "Ice Lake" nodes, each with 80 total cores and 256 GB DDR4 RAM

- SPR - 560 dual-processor Intel Xeon Max 9480 "Sapphire Rapids HBM" nodes, each having 112 total cores and 128 GB HBM

- PVC - 20 quad-GPU "Ponte Vecchio" nodes, each having 4x Intel Data Center GPU Max 1550s, hosted by 2x Intel Xeon Platinum 8480+ "Sapphire Rapids" processors with 112 total cores and 512 GB DDR5 RAM

Frontera is a much larger HPC cluster composed of 8,474 compute nodes, again of a few different types:

- CLX - 8,368 dual-processor Intel Xeon Platinum 8280 "Cascade Lake" nodes, each having 56 total cores and 192 GB DDR4 RAM

- NVDIMM - 16 large-memory, quad-processor Intel Xeon Platinum 8280M "Cascade Lake" nodes, each with 112 total cores and 2.1 TB Intel Optane NVDIMM memory

- RTX - 90 GPU nodes, each featuring 4 NVIDIA Quadro RTX 5000 GPUs, along with 16 total Intel "Broadwell" CPU cores and 128 GB DDR4 RAM
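As a quick cross-check of the figures in the two lists, the short sketch below tabulates the node types and derives a few quantities from them, such as the memory available per CPU core on each node type. The numbers are copied from the lists above; the variable and function names are hypothetical, and the NVDIMM entry assumes 2.1 TB is taken as 2100 GB. Only CPU cores are counted, not GPUs.

```python
# (label, node count, CPU cores per node, memory per node in GB)
STAMPEDE3 = [
    ("SKX", 1060, 48, 192),
    ("ICX", 224, 80, 256),
    ("SPR", 560, 112, 128),
    ("PVC", 20, 112, 512),
]
FRONTERA = [
    ("CLX", 8368, 56, 192),
    ("NVDIMM", 16, 112, 2100),  # assumption: 2.1 TB taken as 2100 GB
    ("RTX", 90, 16, 128),
]


def total_nodes(cluster):
    """Sum the node counts over all node types."""
    return sum(count for _, count, _, _ in cluster)


def total_cores(cluster):
    """Sum the CPU cores over all nodes of all types (GPUs are not counted)."""
    return sum(count * cores for _, count, cores, _ in cluster)


def gb_per_core(cluster):
    """Memory per CPU core, in GB, for each node type."""
    return {label: mem / cores for label, _, cores, mem in cluster}


if __name__ == "__main__":
    for name, cluster in (("Stampede3", STAMPEDE3), ("Frontera", FRONTERA)):
        print(f"{name}: {total_nodes(cluster)} nodes, {total_cores(cluster)} CPU cores")
        for label, ratio in gb_per_core(cluster).items():
            print(f"  {label}: {ratio:.2f} GB per core")
```

The per-type node counts add up to the stated cluster totals, and the memory-per-core ratio makes the differences between node types easier to compare: an SKX node offers 4 GB per core, while an SPR node, with more cores and less (but faster) memory, offers a little over 1 GB per core.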

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)