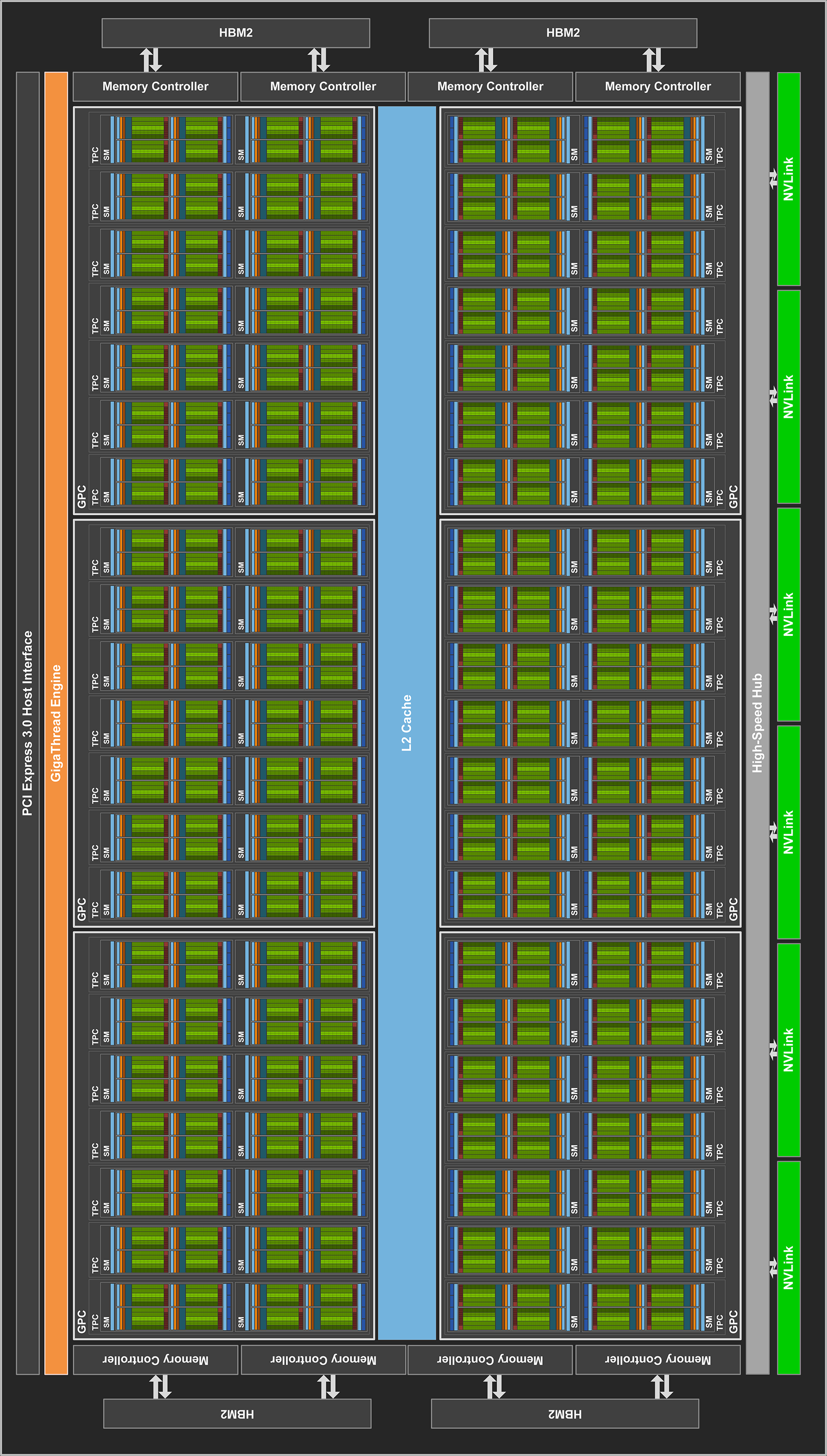

Volta Block Diagram

The NVIDIA Tesla V100 accelerator is built around the Volta GV100 GPU. This chip is designed to provide significant speedups to deep learning algorithms and frameworks, and to offer superior number-crunching power to HPC systems and applications.

As with NVIDIA's previous Pascal generation, the Volta GV100 GPU consists of many Streaming Multiprocessors (SMs) arranged into a loose hierarchy. The SMs are paired into Texture Processing Clusters (TPCs), which are in turn grouped into GPU Processing Clusters (GPCs). The GV100's overall design is depicted in the figure above. In its full configuration, the chip comprises 84 Volta SMs, which are partitioned into 6 GPCs.

The higher-level organization into processing clusters is really only relevant to graphics applications. (But in case you were counting: in the full GV100 GPU shown above, each of the 6 GPCs contains exactly 7 TPCs, for a total of 6 × 7 × 2 = 84 SMs. However, the GV100 GPU actually used in the Tesla V100 has only 80 SMs, which means that 4 SMs (2 TPCs) are disabled; for example, two of its GPCs may have only 6 TPCs each.)
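The SM arithmetic above can be checked with a few lines of Python. This is only a sketch: the counts (6 GPCs, 7 TPCs per GPC, 2 SMs per TPC, 80 SMs in the Tesla V100) come from the text, while the function name `sm_count` and the per-GPC layout chosen for the Tesla V100 are assumptions made for illustration. Which TPCs are actually disabled on a given chip is not stated here.

```python
# Hierarchy counts taken from the text above.
GPCS = 6            # GPU Processing Clusters in the full GV100
TPCS_PER_GPC = 7    # Texture Processing Clusters per GPC (full chip)
SMS_PER_TPC = 2     # SMs are paired into TPCs


def sm_count(tpcs_per_gpc):
    """Return the total number of SMs, given the enabled TPCs in each GPC."""
    return sum(tpcs_per_gpc) * SMS_PER_TPC


# Full GV100: every GPC has all 7 TPCs.
full_gv100 = sm_count([TPCS_PER_GPC] * GPCS)

# ASSUMPTION: one possible Tesla V100 layout, with two GPCs at 6 TPCs each.
# Other layouts that disable 2 TPCs would give the same total.
v100_layout = [7, 7, 7, 7, 6, 6]
tesla_v100 = sm_count(v100_layout)

disabled_tpcs = (full_gv100 - tesla_v100) // SMS_PER_TPC

print(f"Full GV100: {full_gv100} SMs")
print(f"Tesla V100: {tesla_v100} SMs ({disabled_tpcs} TPCs disabled)")
```

On an actual device, the SM count can be queried rather than derived, for example through the `multiProcessorCount` field that the CUDA runtime reports in its device properties.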

The edges of the block diagram show the links to other system components. The longest bar represents the PCIe 3.0 link to the host. The green blocks on the opposite edge are the much faster NVLink 2.0 bridges leading to other NVIDIA devices, as well as to certain POWER9-based hosts, including the IBM AC922 servers in Longhorn (now decommissioned). On the other two edges are the eight 512-bit memory controllers (4096 bits total) that connect the entire array of SMs to the device's HBM2 high-bandwidth memory. The role of the central L2 cache memory will be described later.
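As a rough illustration of what the memory interface width implies, the sketch below multiplies out the controller count and then converts a bus width into a peak transfer rate. The controller figures (eight controllers, 512 bits each) come from the text; the function names and the per-pin data rate of 1.75 Gbit/s are assumptions chosen for illustration, not values stated on this page, so the resulting bandwidth should be read as an order-of-magnitude estimate only.

```python
# Memory interface figures taken from the text above.
MEMORY_CONTROLLERS = 8
BITS_PER_CONTROLLER = 512


def total_bus_width_bits(controllers=MEMORY_CONTROLLERS,
                         bits_per_controller=BITS_PER_CONTROLLER):
    """Combined width of all memory controllers, in bits."""
    return controllers * bits_per_controller


def peak_bandwidth_gb_per_s(bus_width_bits, gbit_per_s_per_pin):
    """Theoretical peak bandwidth in GB/s: one data pin per bus bit,
    each moving gbit_per_s_per_pin gigabits per second, 8 bits per byte."""
    return bus_width_bits * gbit_per_s_per_pin / 8


bus_width = total_bus_width_bits()

# ASSUMPTION: 1.75 Gbit/s per pin is an illustrative data rate only.
ASSUMED_GBIT_PER_S_PER_PIN = 1.75
estimate = peak_bandwidth_gb_per_s(bus_width, ASSUMED_GBIT_PER_S_PER_PIN)

print(f"Bus width: {bus_width} bits")
print(f"Estimated peak bandwidth: {estimate:.0f} GB/s")
```

The point of the calculation is that a very wide interface lets the memory reach high aggregate bandwidth at a modest per-pin rate; sustained bandwidth in real applications will be lower than any such theoretical peak.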

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)