NUMA Control in Code

- Within a code, Scheduling Affinity and Memory Policy can be examined and changed via APIs to Linux system routines

- These APIs let you set affinities and policies that differ per thread

-

Calling APIs from source code is not the only way to influence OpenMP threads:

- One alternative is to set OpenMP environment variables that affect thread placement,

OMP_PROC_BINDandOMP_PLACES - For codes compiled with Intel compilers, OpenMP threads are similarly influenced by the

KMP_AFFINITYenvironment variable - Effects of these environment variables on OpenMP codes are described later

- One alternative is to set OpenMP environment variables that affect thread placement,

-

To modify scheduling affinity via an API: sched_getaffinity, sched_setaffinity

#define _GNU_SOURCE#include <sched.h>- Automatically linked from libc

- Can confirm placement with sched_getcpu

-

To modify memory policy via an API: get_memorypolicy, set_memorypolicy

#include <numaif.h>- Link with

-lnuma

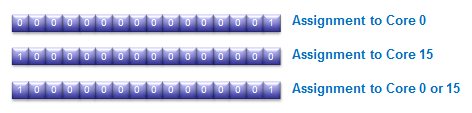

- To make scheduling assignments, set bits in a mask:

Complete code example for Scheduling Affinity:

Quick exercise:

- Copy and compile the above code (

icc -qopenmp schedaff.c -o schedaff). -

Set the number of threads with the

OMP_NUM_THREADSenvironment variable and run the code. - Confirm that each thread is assigned to the core with the matching id.

-

Add an OpenMP function call

nt = omp_get_num_threads()to the parallel section, then use private variablentto assign threads to cores in reverse order (nt-1-inum).

Tip: Intel offers its own API for setting thread affinity

Intel's API for setting thread affinity is described in the Low Level Affinity API subsection in the Intel C++ Compiler Classic Developer Guide and Reference. The Intel API appears similar to Linux system calls, except all routines have kmp_ at the beginning of their names.

Finally, control of memory policy in presence of different kinds of memory—for example, DRAM and MCDRAM—may warrant the use of the memkind library, originally developed by Intel.

- The memkind library is likely most useful in cases where compute nodes have special high-bandwidth memory, but any NUMA architecture may benefit.

- Manual mode of operation: call

hbw_malloc()instead ofmalloc()when wanting to allocate high-bandwidth memory. Calls to other functions in the hbwmalloc collection may also be made. Be sure to#include <hbwmalloc.h>. - Automatic mode of operation: use the autohbw library and related environment variables to specify the threshold for allocating high-bandwidth memory.

- More advanced cases can be covered as well. Information on the fully general API, for multiple types of memory, may be found in the memkind description.

©

|

Cornell University

|

Center for Advanced Computing

|

Copyright Statement

|

Access Statement

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)