Using numactl on Processes

How numactl command arguments impact processes

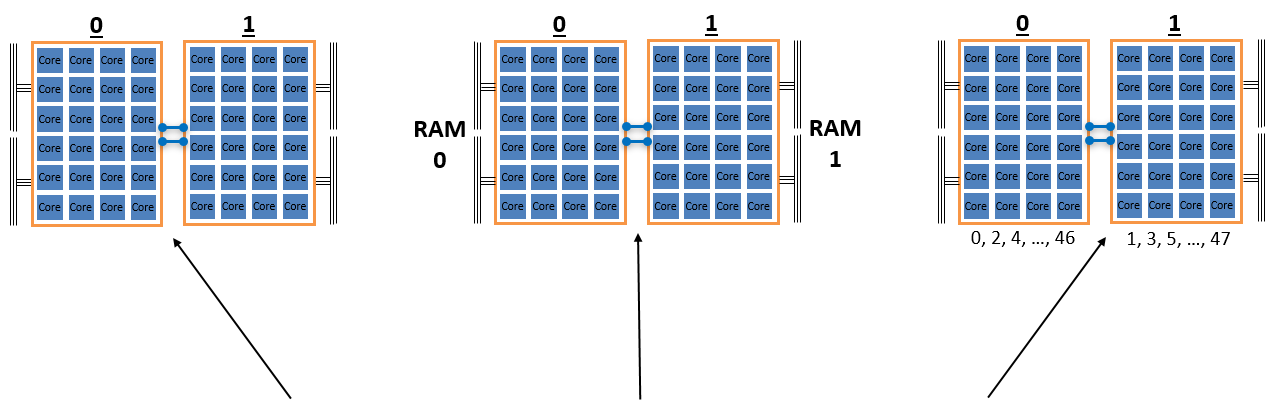

Consider the effects of the following command on a Stampede3 Skylake node with 48 cores:

    numactl <option socket(s)/core(s)> ./a.out

| For a Process: Socket Control | For a Process's Memory: Socket Control | For a Process: Core Control |
|---|---|---|
| assignment to socket(s): -N | pattern of memory allocation (local, interleaved, preferred, mandatory): -l, -i, --preferred, -m | assignment to core(s): -C |

Quick guide to numactl

| Category | Flag | Arguments | Action |
|---|---|---|---|
| Socket Affinity | -N | {0,1} | Execute process on cores of this (these) socket(s) only. |
| Memory Policy | -l | no argument | Allocate on current socket; fallback to any other if full. |
| Memory Policy | -i | {0,1} | Allocate round robin (interleave) on these sockets. No fallback. |
| Memory Policy | --preferred= | {0,1} | Allocate on this socket; fallback to any other if full. |
| Memory Policy | -m | {0,1} | Allocate only on this (these) socket(s). No fallback. |
| Core Affinity | -C | {0,1,2,3,...47} | Execute process on this (these) core(s) only. |
| NUMA Information | --hardware | no argument | Display detailed info about NUMA nodes and internode distances. |
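
As a rough illustration of how the flags in the quick guide combine, the Python sketch below assembles a few numactl command lines as argument lists and prints them. The helper name `numactl_cmd`, the example descriptions, and the program name `./a.out` are placeholders chosen for this example; only the flags come from the guide above. Nothing is executed here.

```python
import shlex

def numactl_cmd(program, *options):
    """Assemble a numactl command line as an argument list (hypothetical helper)."""
    return ["numactl", *options, program]

# Example combinations; socket and core numbers are illustrative only.
examples = {
    "run on socket 0, allocate only on socket 0":
        numactl_cmd("./a.out", "-N", "0", "-m", "0"),
    "run on socket 1, prefer memory on socket 1":
        numactl_cmd("./a.out", "-N", "1", "--preferred=1"),
    "interleave memory across both sockets":
        numactl_cmd("./a.out", "-i", "0,1"),
    "pin to cores 0-3, allocate on the current socket":
        numactl_cmd("./a.out", "-C", "0-3", "-l"),
}

for description, cmd in examples.items():
    # shlex.join renders the list the way it would be typed in a shell
    print(f"{description}: {shlex.join(cmd)}")
```

On a machine where numactl is installed, any of these lists could be passed to `subprocess.run` to launch the program with that policy.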

Notes on terminology

So far, the discussion of NUMA has involved familiar hardware objects like sockets (or equivalently, processors) and cores. However, these terms can be generalized into broader concepts, namely, NUMA nodes and hardware threads. In typical x86-based processors like Intel Xeons, one often assumes that NUMA nodes are the same as sockets, and hardware threads are the same as cores. But for x86 processors built from multi-chip modules (e.g., Intel's "EMIB" technology), or for Xeons with hyperthreading enabled, these assumptions can break down.

In the more general conception of hardware that numactl works with, NUMA nodes are the predesignated pools of memory that are deemed to be "closer" to one group of hardware threads than to another group. Hardware threads may also be referred to as "logical CPUs" or "virtual CPUs" (vCPUs).
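
One way to see which logical CPUs a process is allowed to use is to ask the scheduler from inside the process. The following is a minimal sketch, assuming Python 3 on Linux, where the standard-library call `os.sched_getaffinity` is available; the function name `allowed_logical_cpus` is made up for this example, and the fallback branch is an assumption for platforms without that call.

```python
import os

def allowed_logical_cpus():
    """Return the sorted logical CPU ids this process may run on."""
    if hasattr(os, "sched_getaffinity"):
        # 0 means "the calling process"
        return sorted(os.sched_getaffinity(0))
    # Assumed fallback for non-Linux platforms: report every CPU, no restriction
    return list(range(os.cpu_count() or 1))

cpus = allowed_logical_cpus()
print(f"{len(cpus)} logical CPU(s) allowed: {cpus}")
```

Launching such a script under numactl with -C or -N should shrink the reported set to the requested cores or to the cores of the requested socket(s).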

Further details on numactl use can be found by typing man numactl on the system you are using. A summary of the NUMA nodes available on a given machine, as well as other CPU-related info, can be obtained by running lscpu.
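
The NUMA lines that lscpu prints can be turned into a node-to-CPU map with a few lines of Python. The sketch below is illustrative only: `SAMPLE_LSCPU` is an invented excerpt in the style of lscpu output, not output from any real system, and the function names are placeholders.

```python
import re

# Invented sample in the style of lscpu output; not taken from a real machine.
SAMPLE_LSCPU = """\
CPU(s):              8
Thread(s) per core:  1
NUMA node(s):        2
NUMA node0 CPU(s):   0-3
NUMA node1 CPU(s):   4,5,6-7
"""

def expand_cpu_list(text):
    """Expand a CPU list such as '0-3,8' into [0, 1, 2, 3, 8]."""
    cpus = []
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate an empty list (a node with no CPUs)
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus

def numa_nodes(lscpu_text):
    """Map each NUMA node number to its list of logical CPU ids."""
    nodes = {}
    for line in lscpu_text.splitlines():
        # Matches lines like "NUMA node0 CPU(s): 0-3", not "NUMA node(s): 2"
        match = re.match(r"\s*NUMA node(\d+) CPU\(s\):\s*(.*)", line)
        if match:
            nodes[int(match.group(1))] = expand_cpu_list(match.group(2))
    return nodes

print(numa_nodes(SAMPLE_LSCPU))
```

To work with a real machine, the sample string could be replaced by the captured output of lscpu, for example via `subprocess.run(["lscpu"], capture_output=True, text=True).stdout`.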

Specific considerations for memory usage and NUMA node configurations on TACC systems can be found in the respective user guides at the TACC documentation hub. More background on NUMA, and specifically on NUMA in Linux, can be found in this ACM Queue article.

Historical note: Stampede2 once offered several extra batch queues that provided optional NUMA-node configurations for its KNL nodes. These Xeon Phi processors came with special onboard high-bandwidth memory called MCDRAM. To identify the NUMA node that corresponded to the MCDRAM, one could issue a helpful command from the memkind library, memkind-hbw-nodes.

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)