Classification

Scikit-learn (module name: sklearn) provides a wealth of functionality for carrying out a wide variety of machine learning tasks. Its documentation provides a great deal of background information on different machine learning methods, as well as their implementation in Python using scikit-learn. Scikit-learn provides routines for both supervised learning problems (such as classification and regression) and unsupervised learning problems (such as clustering, dimensionality reduction, and feature extraction). The map on the "Choosing the right estimator" page of the scikit-learn documentation can be a useful place to start if you're not sure what type of machine learning problem you are working with.

In this section, we will examine the use of scikit-learn to build classifiers, that is, models that predict categorical labels after learning from a set of labeled training data. We will do so using the Twitter data that we have been working with, in order to assign tweets to different categories based upon their textual content. We encourage you to follow along in the associated Jupyter notebook so that you can see this process in greater detail.

For a supervised learning task, one must provide labeled data. In an image classification task, for example, one would label each image with the type of object it depicts. The scikit-learn tutorial provides a nice example using the well-studied problem of digit recognition. Our tweet data, however, have no obvious categorical labels. We will therefore leverage the capabilities of another package (Textblob) to perform sentiment analysis on the tweet text, so that we can assign labels to the data for further analysis. Admittedly, this workflow is a bit artificial: we might simply use Textblob directly to carry out these sorts of analyses, rather than using it as a tool for generating labels for input to scikit-learn. In the associated Jupyter notebook, we first perform some text preparation and cleanup, which we will not reproduce here. In addition, since many tweets in our full dataset are retweets of the same original tweets, we filter the full dataset to drop these duplicates, resulting in a dataset of approximately 44,000 tweets that we will examine in further detail.
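The notebook's cleanup code is not reproduced here, but the duplicate-removal step might look roughly like the following pandas sketch. It assumes that retweets of the same original tweet share identical text after cleanup, which may not hold exactly for the real data; the dataframe and its `user` and `text` columns are hypothetical stand-ins.

```python
import pandas as pd

# Hypothetical stand-in for the cleaned tweet data; column names are assumptions.
tweets = pd.DataFrame(
    {
        "user": ["alice", "bob", "carol", "dave"],
        "text": [
            "Act on #climatechange now",
            "Act on #climatechange now",  # retweet of the first tweet
            "Heat records broken again",
            "Act on #climatechange now",  # another retweet
        ],
    }
)

# Keep only the first occurrence of each distinct tweet text.
unique_tweets = tweets.drop_duplicates(subset="text", keep="first").reset_index(drop=True)
print(len(tweets), "->", len(unique_tweets))  # 4 -> 2
```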

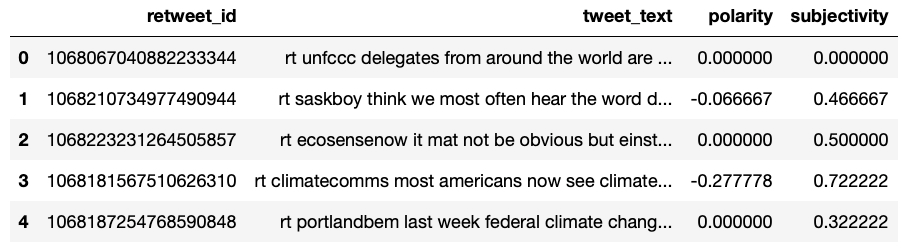

Textblob can produce quantitative estimates of textual sentiment ("positive" vs. "negative" sentiment) and textual subjectivity ("subjective" vs. "objective" statements). Polarity scores run continuously from -1 (negative sentiment) to +1 (positive), and subjectivity scores run from 0 (objective) to 1 (subjective). We have run our #climatechange tweet dataset through Textblob in order to associate a polarity score and a subjectivity score with each tweet. Using somewhat arbitrary cutoffs, we break these continuous scores into a small set of categories. A polarity > 0.5 is labeled "positive", a polarity < -0.3 is labeled "negative", and polarities between these two thresholds are labeled "neutral". Similarly, a subjectivity > 0.9 is labeled "subjective", a subjectivity < 0.02 is labeled "objective", and those in between are labeled "neither". As part of our dataset preparation, we merge these scores and labels with information about the tweets, leading to a Pandas dataframe named dff.
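The thresholding step can be sketched as two small functions. This is a minimal sketch: the cutoffs are the ones quoted above, while the function names and example scores are hypothetical. In practice the raw scores would come from Textblob, for example `TextBlob(text).sentiment.polarity` and `TextBlob(text).sentiment.subjectivity`.

```python
def polarity_label(polarity: float) -> str:
    """Map a polarity score in [-1, 1] to a category."""
    if polarity > 0.5:
        return "positive"
    if polarity < -0.3:
        return "negative"
    return "neutral"  # -0.3 <= polarity <= 0.5


def subjectivity_label(subjectivity: float) -> str:
    """Map a subjectivity score in [0, 1] to a category."""
    if subjectivity > 0.9:
        return "subjective"
    if subjectivity < 0.02:
        return "objective"
    return "neither"  # 0.02 <= subjectivity <= 0.9


# Made-up scores for illustration (not taken from the tweet data).
print([polarity_label(p) for p in [0.8, 0.5, 0.0, -0.3, -0.6]])
# ['positive', 'neutral', 'neutral', 'neutral', 'negative']
print([subjectivity_label(s) for s in [1.0, 0.5, 0.0]])
# ['subjective', 'neither', 'objective']
```

Note that scores exactly at a cutoff (0.5 or -0.3) fall into the middle category, because the comparisons are strict. With a dataframe, the same functions could be applied column-wise, e.g. `dff["polarity"].apply(polarity_label)`, assuming the scores live in a column named `polarity` (a hypothetical name).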

Our goal is to build a classifier that can predict these text labels (e.g., positive, negative, subjective, objective) based on tweet text, by training on a subset of tweets that have such labels assigned. Scikit-learn provides support for a wide range of different classification algorithms, of which we will consider only a few here. Since we are working with textual input, however, we must first transform the textual data to a numerical representation for use with scikit-learn classifiers. There are many different methods for carrying out such a transformation; in this case, we have used the CountVectorizer that is part of the sklearn.feature_extraction.text module. By producing a vectorizer object and fitting it to the tweet text, we can create a numerical feature array of shape (44127, 85), i.e., an 85-component feature vector for each of the 44127 tweets.

We'll start by building a linear classifier based on logistic regression (which, despite the word "regression" in its name, is a method for classification with categorical labels rather than regression on continuous targets). This classifier is provided as LogisticRegression in the sklearn.linear_model module.
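LogisticRegression accepts string class labels directly and handles more than two classes. The sketch below uses made-up two-dimensional features rather than the tweet vectors; `max_iter=1000` is an assumed setting that simply gives the solver room to converge.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Toy two-feature data with string class labels (made up for illustration).
X = np.array([[0.0, 1.0], [0.2, 0.9], [1.0, 0.0], [0.9, 0.1], [0.5, 0.5], [0.45, 0.55]])
y = np.array(["negative", "negative", "positive", "positive", "neutral", "neutral"])

clf = LogisticRegression(max_iter=1000)
clf.fit(X, y)

print(clf.classes_)  # ['negative' 'neutral' 'positive'] (label names, sorted)
proba = clf.predict_proba(X[:2])  # one probability per class for each sample
print(proba.shape)  # (2, 3)
print(clf.predict([[0.1, 0.95]]))  # predicted label for a new point
```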

Scikit-learn provides a number of functions that help organize data for the core tasks of a machine learning analysis: training models, testing their predictions, and assessing their performance. We provide a few examples here, first by splitting the full dataset into a single static pair of training and testing sets, and then by carrying out k-fold cross-validation, in which the data are partitioned into k folds and each fold takes a turn as the test set while the remaining folds are used for training. This round-robin scheme yields a performance estimate that does not hinge on a single static training set, which makes overfitting easier to detect. We'll focus here on building a model of the polarity label data, i.e., associating polarity labels with tweet feature vectors.
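A static split can be made with `train_test_split` from sklearn.model_selection. In this minimal sketch the arrays are made-up stand-ins for the tweet feature matrix and polarity labels, and `test_size=0.2` and `random_state=0` are assumed values, not necessarily those used in the notebook.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Stand-ins for the tweet feature matrix and polarity labels (made up).
X = np.arange(20).reshape(10, 2)
y = np.array(["neutral"] * 6 + ["positive"] * 2 + ["negative"] * 2)

# Hold out 20% of the rows for testing; random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
print(X_train.shape, X_test.shape)  # (8, 2) (2, 2)
```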

Having split the data into training and testing sets, we can fit our logistic model to the training data, make predictions on the testing data, and compute the accuracy score of those predictions. For classification problems, the accuracy score is the fraction of test samples whose labels the model predicts correctly.
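The fit, predict, and score sequence might look like the sketch below. Because the tweet features are not reproduced here, it substitutes synthetic three-class data from `make_classification`; the dataset sizes, `test_size`, and `max_iter` are assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic three-class data standing in for the tweet feature vectors.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

clf = LogisticRegression(max_iter=1000)
clf.fit(X_train, y_train)     # learn coefficients from the training split
y_pred = clf.predict(X_test)  # predict labels for the held-out split

acc = accuracy_score(y_test, y_pred)
print(f"accuracy: {acc:.3f}")
print(f"fraction correct: {np.mean(y_pred == y_test):.3f}")  # same number

# A hand-checkable case: 3 of 4 labels match.
print(accuracy_score(["pos", "neg", "neu", "pos"], ["pos", "neg", "pos", "pos"]))  # 0.75
```

For classifiers, `clf.score(X_test, y_test)` returns the same accuracy in a single call.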

When we run this code (see the associated Jupyter notebook), we find that for this particular train-test split the model achieves an accuracy of approximately 91%. In general, however, it is better to assess accuracy over a variety of training-testing splits. This is supported by the cross_validate function in sklearn.model_selection, which we call here with a 5-fold cross-validation strategy.
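To make the round-robin idea concrete, this sketch uses `KFold` to split ten made-up samples into five folds and checks that every sample lands in a test fold exactly once. Note that when `cross_validate` is given an integer `cv` together with a classifier and class labels, scikit-learn uses `StratifiedKFold` instead, which also keeps class proportions similar across folds.

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(10).reshape(10, 1)  # ten made-up samples

kf = KFold(n_splits=5)  # no shuffling: consecutive blocks of two samples
test_counts = np.zeros(len(X), dtype=int)
for fold, (train_idx, test_idx) in enumerate(kf.split(X)):
    print(f"fold {fold}: test={test_idx.tolist()}, train size={len(train_idx)}")
    test_counts[test_idx] += 1

print(test_counts)  # [1 1 1 1 1 1 1 1 1 1]: each sample is tested exactly once
```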

This function returns a dictionary containing information about each fit, such as fit and scoring times, and, most notably, the array of test scores (here, accuracy scores), stored under the key test_score, with one entry for each of the 5 decompositions into training and testing sets.
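A sketch of the cross-validation call and of reading its result dictionary follows. Synthetic data again stands in for the tweet features and polarity labels, and `max_iter=1000` is an assumed setting.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate

# Synthetic three-class data standing in for the tweet features and polarity labels.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_classes=3, random_state=0
)

results = cross_validate(LogisticRegression(max_iter=1000), X, y, cv=5)
print(sorted(results))  # ['fit_time', 'score_time', 'test_score']
scores = results["test_score"]  # one accuracy value per fold
print(scores.round(3))
print(f"mean accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Passing `return_train_score=True` would add a `train_score` entry, which can help reveal overfitting when training accuracy is much higher than test accuracy.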

This just scratches the surface of what is possible with this dataset in scikit-learn. In the associated Jupyter notebook, you can explore the application of different types of classifiers, as well as their application to the subjectivity labels.
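Because scikit-learn classifiers share the same fit/predict interface, comparing several of them can be a short loop. The classifiers below are chosen only for illustration and are not necessarily the ones explored in the notebook; synthetic data stands in for the tweet features.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic three-class data standing in for the tweet features and labels.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, n_classes=3, random_state=0
)

# Candidate classifiers (illustrative choices); all share the same interface.
classifiers = {
    "logistic regression": LogisticRegression(max_iter=1000),
    "decision tree": DecisionTreeClassifier(random_state=0),
    "k-nearest neighbors": KNeighborsClassifier(n_neighbors=5),
}

mean_accuracy = {}
for name, clf in classifiers.items():
    scores = cross_validate(clf, X, y, cv=5)["test_score"]
    mean_accuracy[name] = scores.mean()
    print(f"{name:>20}: {mean_accuracy[name]:.3f}")
```

Switching from the polarity labels to the subjectivity labels would only require passing a different `y`.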

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)