AI in Science

Although AI attracts most of its public attention through applications that generate text, images, and other content, the underlying tools and techniques from machine learning (ML) and deep learning (DL) are also increasingly being integrated into scientific research in interesting and innovative ways. This includes the use of specific ML and DL techniques to address important problems in science and engineering, as well as broader methodological efforts that go by names such as "Scientific Machine Learning", "Scientific AI", and "AI for Science".

Many uses of machine learning in the physical sciences were summarized in a comprehensive 2019 review article. A more recent review article, "Scientific discovery in the age of artificial intelligence" (Wang et al.), summarized many of the important trends in this area. These trends involve not just using AI tools to analyze data and build predictive models (which we will discuss more on the following pages), but also integrating such tools into the scientific research process, broadly construed. That second review emphasized the following elements:

- AI-aided data collection and curation for scientific research
  - Data selection
  - Data annotation
  - Data generation
  - Data refinements

- Learning meaningful representations of scientific data
  - Geometric priors
  - Geometric deep learning
  - Self-supervised learning
  - Language modelling
  - Transformer architectures
  - Neural operators

- AI-based generation of scientific hypotheses
  - Black-box predictors of scientific hypotheses
  - Navigating combinatorial hypothesis spaces
  - Optimizing differentiable hypothesis spaces

- AI-driven experimentation and simulation
  - Efficient evaluation of scientific hypotheses
  - Deducing observables from hypotheses using simulations

The integration of AI into the scientific process will surely continue. In many cases, insights and techniques from one problem domain can be productively recast for use in another domain. For example, researchers have been able to leverage techniques from Natural Language Processing (NLP) to build protein language models and chemical language models.

When a protein chain folds into a 3D structure, interactions and associations are introduced between amino acids that may be separated by large distances in the linear protein chain. This is reminiscent of the way that long-range associations develop between words in natural language, and of how the self-attention mechanisms introduced in Transformer architectures can learn and capture those long-range associations. In fact, Transformers trained on large databases of protein sequences are able to learn those associations as part of protein structure prediction.
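To make the idea of long-range association concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration only: the function names and the toy embedding vectors are hypothetical, and it uses the raw embeddings as queries, keys, and values, whereas a real Transformer learns separate projection matrices for each. The point is simply that attention weights depend on how similar two positions are, not on how far apart they sit in the sequence.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product self-attention with Q = K = V = embeddings.

    Simplifying assumption: no learned projection matrices are applied.
    Returns (weights, outputs), where weights[i][j] is how strongly
    position i attends to position j.
    """
    dim = len(embeddings[0])
    weights, outputs = [], []
    for query in embeddings:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is the attention-weighted average of all value vectors.
        outputs.append([
            sum(row[j] * embeddings[j][c] for j in range(len(embeddings)))
            for c in range(dim)
        ])
    return weights, outputs

# Hypothetical toy "residue" embeddings: positions 0 and 5 are far apart
# in the sequence but share the same feature vector.
toy_embeddings = [
    [2.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [2.0, 0.0, 0.0],
]

weights, outputs = self_attention(toy_embeddings)
print([round(w, 3) for w in weights[0]])
```

In this toy setup, position 0 attends to the distant position 5 more strongly than to its immediate neighbor at position 1, because the attention score is computed from vector similarity alone.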

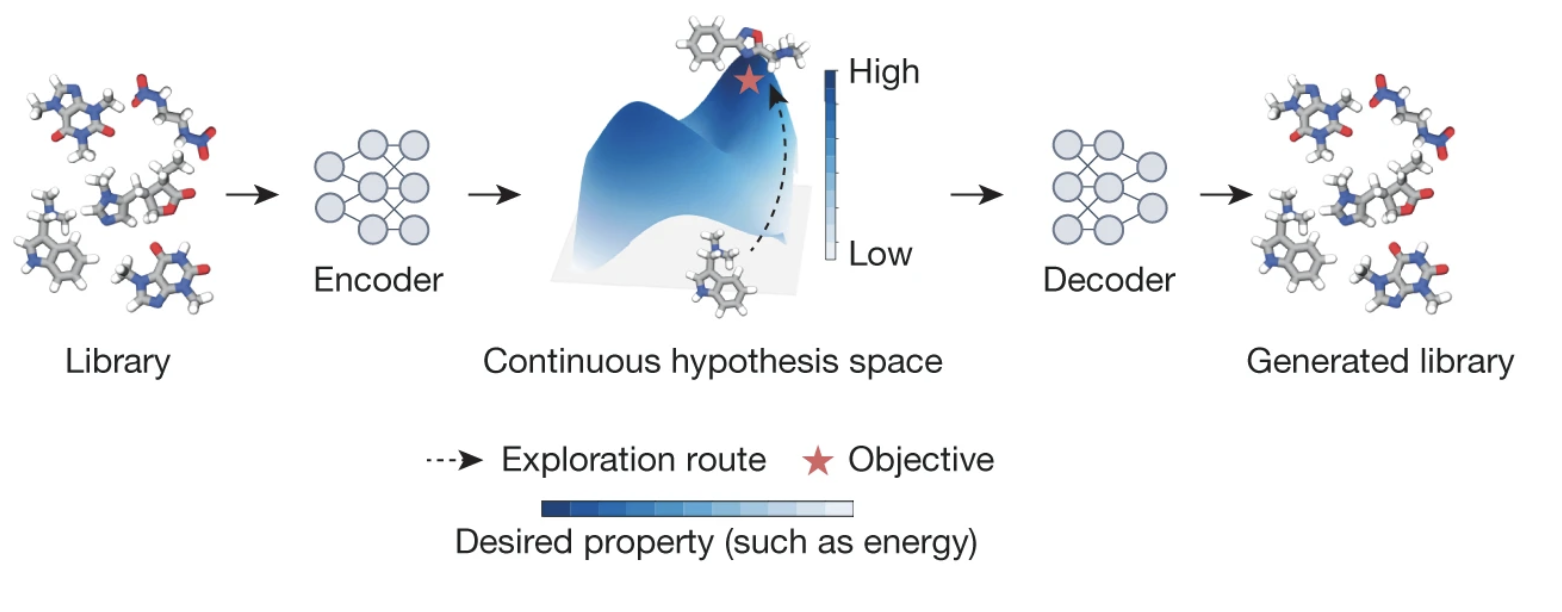

Similarly, there is great interest in generating novel candidate small-molecule compounds for potential use as new drugs in medical therapies, and in being able to computationally predict the properties of compounds without needing to experimentally test every possible candidate. Chemical language models are used as part of such a process, using an underlying "language" of chemical structure such as the SMILES or Canonical SMILES formats. Those languages encode not just the atomic components of a molecular compound, but also the chemical bond structure connecting those atoms. The review article cited above on scientific discovery in the age of artificial intelligence briefly describes how such approaches are used in conjunction with encoder-decoder architectures to generate candidate molecular compounds, as summarized in the accompanying figure.
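As a rough illustration of how a SMILES string can be treated as a "sentence", the sketch below splits a SMILES string into tokens and maps them to integer IDs, which is the kind of input a language model consumes. The regular expression is a simplified assumption that covers common organic-subset atoms, bracket atoms, bonds, branches, and ring closures; it is not a complete SMILES grammar, and the function names are hypothetical. The example molecule is aspirin.

```python
import re

# Simplified token pattern (an assumption, not a full SMILES grammar):
# bracket atoms, two-letter halogens, single-letter atoms, aromatic atoms,
# two-digit ring closures, single-digit ring closures, bonds and branches.
SMILES_TOKEN = re.compile(
    r"\[[^\]]+\]|Br|Cl|[BCNOSPFI]|[bcnosp]|%\d{2}|\d|[()=#\-+\\/:.@*~$]"
)

def tokenize_smiles(smiles):
    """Split a SMILES string into tokens; raise if any character is unmatched."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"Unrecognized characters in SMILES string: {smiles!r}")
    return tokens

def build_vocabulary(token_lists):
    """Assign an integer ID to each distinct token, in sorted order."""
    distinct = sorted({tok for tokens in token_lists for tok in tokens})
    return {tok: idx for idx, tok in enumerate(distinct)}

aspirin = "CC(=O)Oc1ccccc1C(=O)O"
aspirin_tokens = tokenize_smiles(aspirin)
vocabulary = build_vocabulary([aspirin_tokens])
aspirin_ids = [vocabulary[tok] for tok in aspirin_tokens]

print(aspirin_tokens)
print(aspirin_ids)
```

Note that the tokens carry both atoms (`C`, `O`, aromatic `c`) and structure (`=` for a double bond, parentheses for branches, digits for ring closures), which is how the string encodes bond connectivity as well as composition.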

Image credit: Wang et al., "Scientific discovery in the age of artificial intelligence", https://www.nature.com/articles/s41586-023-06221-2

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)