AI as a Complex System

While AI frameworks, and the machine learning and deep learning systems that they are built from, are enabling new kinds of science, they are simultaneously and importantly the objects of scientific study.

The field of physics has long been interested in the emergence of collective behaviors in complex systems with many interacting components. These collective behaviors include phenomena such as symmetry breaking, ordering, synchronization, pattern formation, self-organization, self-similarity, criticality, universality, and phase transitions. The 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks". That award acknowledges not just the application of artificial neural networks across a variety of fields, but also the intrinsic value of investigating the structure and function of complex neural networks to better understand how they learn and what they learn. Hopfield's work on neural networks was inspired in part by prior research on spin glasses, a class of disordered magnetic systems whose rugged energy landscapes contain many locally stable minima, each corresponding to a subtly different configuration of the system. Hopfield reasoned that similar local minima could be exploited in a system composed of interconnected neurons to store memories of previously observed images and to retrieve them from incomplete or noisy inputs, a process loosely analogous to what we might now call training a classifier. The figure below from the Royal Swedish Academy of Sciences summarizes this process graphically.

Image credit: Johan Jarnestad / The Royal Swedish Academy of Sciences, https://www.nobelprize.org/prizes/physics/2024/press-release/
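Hopfield's idea of storing memories as local minima of an energy function can be sketched in a few lines. The example below is a minimal, illustrative version of a classic Hopfield network, assuming binary ±1 neurons, the Hebbian storage rule W = (1/N) Σ_p x_p x_pᵀ with zero diagonal, and asynchronous updates. The function names and the toy random "images" are hypothetical choices for this sketch, not part of the original material. Because W is symmetric with no self-connections, each update can only lower or keep the energy E(s) = -½ sᵀWs, so a corrupted pattern typically slides downhill into a nearby stored minimum.

```python
import numpy as np

def train_hebbian(patterns):
    """Store +/-1 patterns via the Hebbian rule W = (1/N) * sum_p x_p x_p^T."""
    patterns = np.asarray(patterns, dtype=float)
    n_neurons = patterns.shape[1]
    w = patterns.T @ patterns / n_neurons
    np.fill_diagonal(w, 0.0)  # no self-connections
    return w

def energy(w, s):
    """Hopfield energy E(s) = -1/2 s^T W s (bias terms omitted)."""
    return -0.5 * s @ w @ s

def recall(w, s, sweeps=10, seed=None):
    """Asynchronously set each neuron to the sign of its local field."""
    rng = np.random.default_rng(seed)
    s = np.array(s, dtype=float)
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1.0 if w[i] @ s >= 0 else -1.0
    return s

# Toy demo: three random 100-pixel "images" stored as +/-1 patterns
rng = np.random.default_rng(0)
patterns = rng.choice([-1.0, 1.0], size=(3, 100))
w = train_hebbian(patterns)

# Corrupt 10 pixels of the first pattern, then let the network relax
noisy = patterns[0].copy()
noisy[rng.choice(100, size=10, replace=False)] *= -1
recovered = recall(w, noisy, seed=1)

print("pixels matching stored pattern:", int(np.sum(recovered == patterns[0])), "/ 100")
print("energy before:", energy(w, noisy), "after:", energy(w, recovered))
```

With only 3 patterns stored in 100 neurons, well below the network's storage capacity, the corrupted input should be restored exactly. Storing many more patterns creates spurious minima in the energy landscape, and recall then begins to fail.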

Modern deep learning models used in AI are vastly larger than the small associative memories that Hopfield built, and correspondingly harder to interpret. Much ongoing research focuses on probing large neural network models, with the goal of peeling back their opacity to understand what is happening inside them. In addition, companies developing AI technologies are working to design and build adequate guardrails that can corral large language models (LLMs) and other generative models, so that they can be deployed safely to the public.

At the intersection of AI and Complex Systems, where the mantra of "More is Different" wanders into new and curious landscapes, researchers are endeavoring to address questions such as:

- what features in data are AI systems learning, and how are those features encoded in models?

- how do the capabilities of AI systems scale with the size of models and training data?

- how are models able to generalize when they are trained in such enormous parameter spaces?

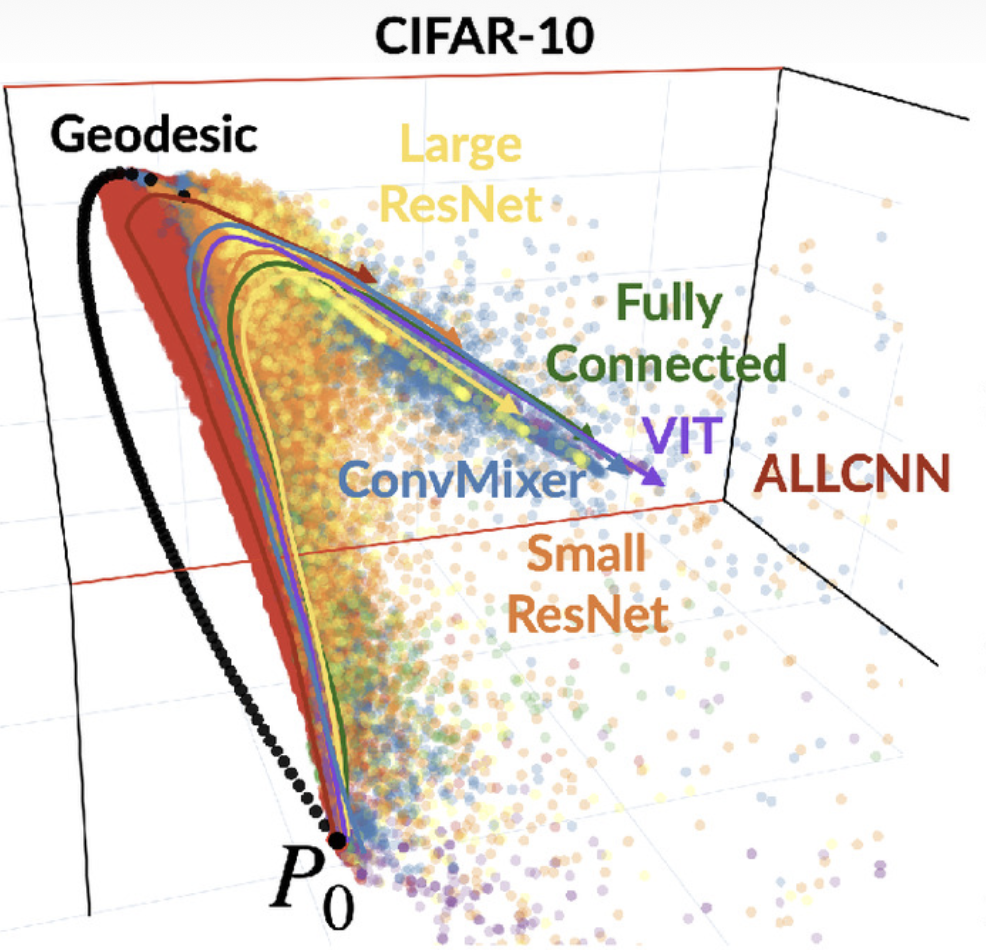

As an example of this sort of work, recent research has combined large-scale data analysis with information-geometric techniques to track how the predictions of deep neural networks evolve during training. The authors concluded that the training process of many deep networks explores the same low-dimensional manifold, as summarized in the figure below.

Image credit: Mao et al., https://www.pnas.org/doi/10.1073/pnas.2310002121
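The core idea of treating a training run as a trajectory through "prediction space" can be illustrated with a toy model. Mao et al. apply information-geometric embeddings to the predicted probability distributions of deep networks; the sketch below only conveys the general flavor, swapping in ordinary PCA and a small logistic-regression model trained on hypothetical synthetic data. Each snapshot of a model's predicted probabilities on the n training points is treated as a point in Rⁿ, snapshots from several runs with different random initializations are pooled, and we ask how much of the total variance the leading principal components capture. All function names, sizes, and hyperparameters here are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_trajectory(x, y, w0, lr=0.5, steps=50):
    """Full-batch gradient descent on logistic loss; record predicted probabilities after every step."""
    w = w0.copy()
    snapshots = []
    for _ in range(steps):
        p = sigmoid(x @ w)
        grad = x.T @ (p - y) / len(y)
        w -= lr * grad
        snapshots.append(sigmoid(x @ w))
    return np.array(snapshots)  # shape (steps, n_samples)

def explained_variance(points):
    """Fraction of total variance along each principal component (via SVD of centered data)."""
    centered = points - points.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)  # singular values, descending
    var = s**2
    return var / var.sum()

# Hypothetical synthetic binary-classification data
rng = np.random.default_rng(0)
n, d = 200, 5
x = np.hstack([rng.normal(size=(n, d)), np.ones((n, 1))])  # last column acts as a bias term
true_w = rng.normal(size=d + 1)
y = (sigmoid(x @ true_w) > rng.uniform(size=n)).astype(float)

# Ten runs from different random initializations, all embedded in the same prediction space R^n
runs = [train_trajectory(x, y, rng.normal(scale=2.0, size=d + 1)) for _ in range(10)]
points = np.vstack(runs)  # shape (10 * 50, n)
ratios = explained_variance(points)
print("variance captured by top 3 components:", ratios[:3].sum())
```

In this toy, the predictions are controlled by only six parameters, so one would expect a handful of components to dominate. That says nothing about whether deep networks with millions of parameters behave similarly, which is precisely the question the research above addresses.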

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)