Deep Learning and Computational Hardware

While much of the current buzz about AI focuses on the end-user capabilities of AI applications and LLMs, there is also a lot of discussion about the massive amount of computing resources needed to build, train, and run large generative AI models. Enormous data centers are being built by companies seeking to gain prominence in the field, snatching up or building as many GPUs and other specialized chips as they can get their hands on. High-end supercomputer clusters are increasingly outfitted with specialized hardware to support AI workloads. What's this all about?

Hardware acceleration

Deep learning methods can be executed on any general-purpose computer, but they are particularly well suited to the hardware acceleration provided by graphics processing units (GPUs), as well as by related processors targeted specifically at deep learning. This is because the core computations of neural networks (multiplying node outputs by edge weights and adding biases or offsets, in either forward or backward propagation through the network) are linear algebra operations that can be parallelized over many data streams in a large network. Fundamentally, these kinds of operations are what GPUs were originally built to support, albeit in the context of processing graphics for a computer display.
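To make the linear algebra concrete, here is a minimal sketch of the forward pass through a single fully connected layer, written in plain Python with no external libraries. The function name `dense_forward` and all of the weights, biases, and inputs are made up for illustration. Real frameworks express the same computation as one matrix-vector (or matrix-matrix) product and hand it to optimized CPU or GPU kernels.

```python
# Forward pass of one fully connected layer: y = W x + b.
# All names and values here are hypothetical, chosen only for illustration.

def dense_forward(weights, biases, inputs):
    """Return one output per row of `weights`: dot(row, inputs) + bias."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Each output depends only on its own row of weights, so these
        # loop iterations are independent and could all run in parallel.
        total = sum(w * x for w, x in zip(row, inputs))
        outputs.append(total + bias)
    return outputs

W = [[1.0, 2.0, 3.0],    # weights feeding output node 0
     [0.0, -1.0, 0.5]]   # weights feeding output node 1
b = [0.5, 1.0]           # one bias per output node
x = [1.0, 0.0, 2.0]      # outputs of the previous layer

y = dense_forward(W, b, x)
print(y)  # [7.5, 2.0]
```

Because no output node needs the result of any other, a layer with thousands of nodes presents thousands of independent multiply-and-add tasks, which is the kind of workload that the many processing units of a GPU can handle simultaneously.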

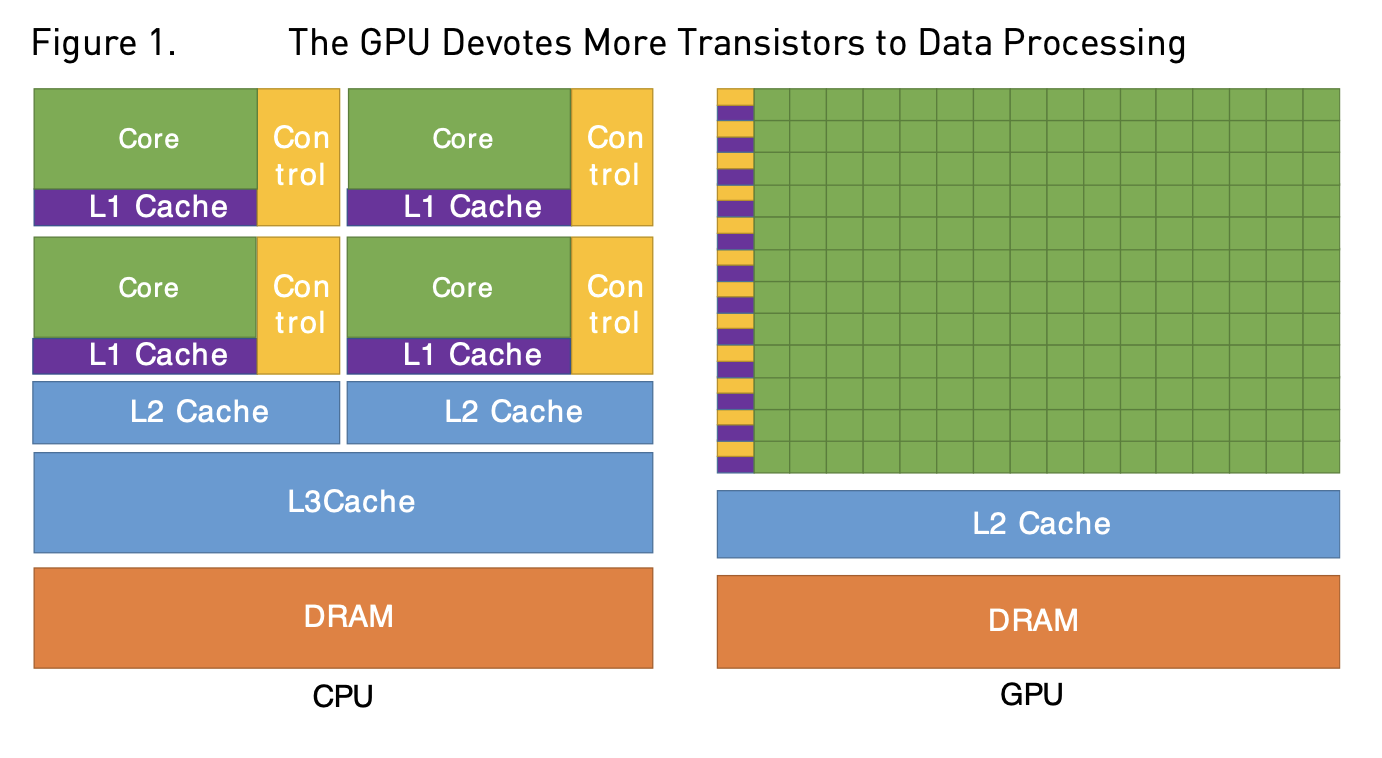

Compared to central processing units (CPUs), GPUs have many more processing units and higher memory bandwidth, while CPUs have more complex instruction sets and faster clock speeds. These issues are discussed in our companion material on GPU architectures — see the section on GPU characteristics for more detail on the differences between GPUs and CPUs. Since the training phase of machine learning can involve many iterated forward and backward passes through the network in order to fit a large number of training samples, GPUs can be especially important for training models on large amounts of data.

Image credit: NVIDIA CUDA C++ Programming Guide, https://docs.nvidia.com/cuda/archive/11.2.0/pdf/CUDA_C_Programming_Guide.pdf
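The iterated forward and backward passes that make up training can be sketched with a deliberately tiny example: fitting a single weight and bias to four data points by gradient descent. The data, learning rate, epoch count, and the function name `train` are all assumptions chosen for illustration, and a real deep learning model would have vastly more parameters and samples.

```python
# Toy training loop: fit y = w*x + b by gradient descent.
# The data were generated from y = 2x + 1 (a hypothetical example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

def train(xs, ys, lr=0.05, epochs=2000):
    """Repeat forward and backward passes; return the fitted (w, b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: compute a prediction for every sample.
        preds = [w * x + b for x in xs]
        # Backward pass: gradients of the mean squared error
        # with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Update step: move the parameters against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train(xs, ys)
print(w, b)  # values close to 2 and 1
```

Every epoch touches every training sample twice, once in each direction. With millions of samples and billions of parameters, this repeated work is where the parallelism of GPUs has the largest payoff.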

Once trained, a deep learning model for making predictions from new data can potentially be run on much less powerful hardware (including, say, a mobile phone). That said, as LLMs are tasked with producing large amounts of generative content, the inference or prediction steps that follow model training increasingly need to run on large machines with substantial GPU resources as well.

GPUs are one example of hardware accelerators, which can be used by CPUs to offload parts of a computation that an accelerator is effective at handling, typically by exploiting processor parallelism. CPUs have long been accompanied by vector units that support vectorization of particular sorts of mathematical operations. The recognition that GPUs can provide massive speedups to deep learning computations has spurred the growth of companies such as NVIDIA, which produce such chips, as well as the development of other specialized hardware for deep learning computations, such as Tensor Processing Units (TPUs). Whereas modern CPUs generally support double-precision numbers composed of 64 bits, it has been recognized that many of the operations carried out by GPUs and other accelerators for deep learning do not need that much precision. This has led to the development of chips that work with lower-precision number formats, which can process data more rapidly and use less power in the process.
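The trade-off between precision and storage can be seen with a short sketch that uses only Python's standard `struct` module, whose format codes `e`, `f`, and `d` correspond to IEEE 754 half, single, and double precision. The helper name `round_trip` and the test value 0.1 are arbitrary choices for illustration. The sketch shows only the storage and rounding side of the story, not the speed or power benefits, which depend on the hardware.

```python
import struct

# struct format codes for IEEE 754 half, single, and double precision.
FORMATS = {"half": "e", "single": "f", "double": "d"}

def round_trip(value, code):
    """Store `value` in the given format; return (bits used, value read back)."""
    packed = struct.pack("<" + code, value)
    restored = struct.unpack("<" + code, packed)[0]
    return len(packed) * 8, restored

value = 0.1  # arbitrary example value
for name, code in FORMATS.items():
    bits, restored = round_trip(value, code)
    error = abs(restored - value)
    print(f"{name}: {bits} bits, stored as {restored!r}, error {error:.2e}")
```

Halving the number of bits halves the memory and bandwidth needed per value, at the cost of a larger rounding error. Python's own floats are double precision, so the 64-bit round trip reproduces the value exactly, while the 16-bit version is accurate to only a few significant digits.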

The confluence of AI and HPC

High Performance Computing (HPC) is an arena that combines expertise and techniques from programming, algorithms, hardware design, software design, and multiprocessing in order to bring together substantial computational resources to solve large problems. Historically, use of HPC resources has been dominated by simulation or analysis of complex scientific applications, arising in fields such as physics, chemistry, astronomy, engineering, and biology. In such problem domains, much expertise has been collectively developed to support parallelization and multiprocessing of applications, management of large datasets, and coordination of complex workflows. Although large AI/ML/DL applications have emerged in part from other corners of the scientific landscape, those applications face many of the same challenges that have historically concerned researchers in the field of HPC. Furthermore, high-end HPC centers (i.e., facilities that are not dedicated solely to training large AI models) need to figure out how best to configure computational resources to support a broad and general mix of computational use cases.

While deep learning computations have driven much of the demand for access to GPUs and other accelerators, it should be noted that many other types of computations can make effective use of the parallelism provided by those processors, and there is active work in developing software frameworks and interfaces that enable computer programmers and computational scientists to leverage those resources as part of larger research computing pipelines.

For an insightful and expert perspective on this confluence of AI and HPC, and on the manner in which the two fields drive each other, readers are encouraged to listen to this podcast with Dan Stanzione, the Executive Director of the Texas Advanced Computing Center (TACC).

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)