Revisiting Foundation Models

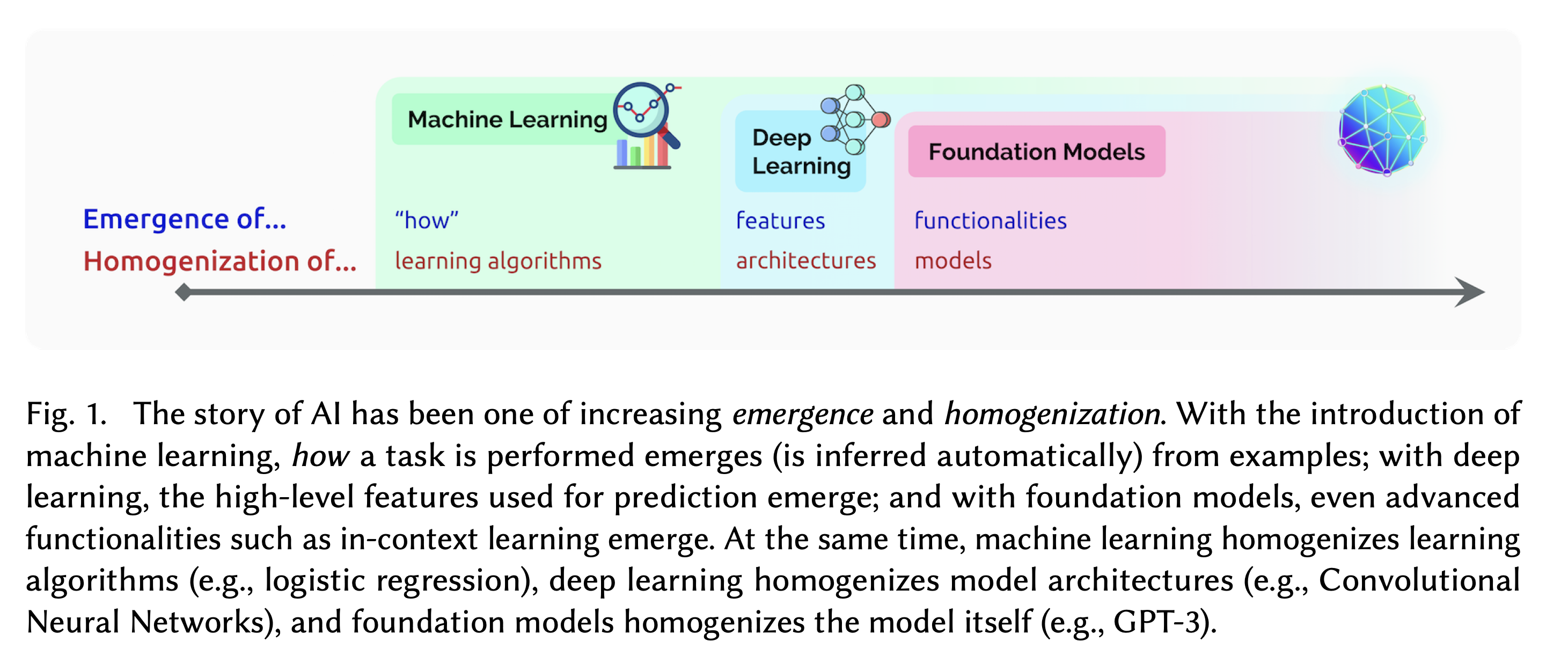

The term "Foundation Model" was coined in an influential 2021 paper, "On the Opportunities and Risks of Foundation Models". One of the overarching points that the authors emphasize about AI is summarized in Fig. 1 of that paper, which is reproduced here along with its caption.

As stated in the caption, the authors note that "The story of AI has been one of increasing emergence and homogenization."

Image credit: Bommasani et al., "On the Opportunities and Risks of Foundation Models", https://arxiv.org/abs/2108.07258

As Foundation Models have become key components in the AI ecosystem, they have also become a substrate upon which researchers can build more complicated AI pipelines by bringing together different models that serve different roles. Models and components are mixed and matched in various ways to support different approaches. Some of the broader approaches that rely on Foundation Models include:

- Mixture of Experts (MoE) models that use a gating mechanism to route each input to a few specialized "experts" and then combine the outputs of those experts

- Teacher-student models that enable a less capable student model to learn from the feedback and direction of a more capable teacher

- Knowledge distillation used in teacher-student and other contexts, where key lessons from an expert model are distilled for use by simpler models

- Reasoning models that assess their own output and think twice (or more) before blurting out an answer

- AI agents that empower a little bot to talk to a bigger set of models, in order to work on one's behalf
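Two of the ideas in this list are easy to illustrate with toy code. The following is a minimal sketch in plain Python, not taken from the paper or from any particular library: the function names (`softmax`, `mixture_of_experts`, `distillation_loss`), the three toy "experts", and all numeric values are hypothetical and chosen only for illustration. It assumes a Mixture of Experts can be reduced to a gate-weighted sum over the top-scoring experts, and that knowledge distillation can be reduced to the KL divergence between temperature-softened teacher and student outputs.

```python
import math

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def mixture_of_experts(x, experts, gate_scores, top_k=2):
    """Combine the outputs of the top_k experts, weighted by their gate scores."""
    weights = softmax(gate_scores)
    # Keep only the top_k experts with the largest gate weights.
    ranked = sorted(range(len(experts)), key=lambda i: weights[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(weights[i] for i in chosen)  # renormalize over the chosen experts
    return sum(weights[i] / norm * experts[i](x) for i in chosen)

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the softened teacher and student distributions."""
    p = softmax(teacher_logits, temperature)  # teacher (target) probabilities
    q = softmax(student_logits, temperature)  # student probabilities
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical experts: simple functions standing in for specialized networks.
experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x]
y = mixture_of_experts(3.0, experts, gate_scores=[2.0, 1.0, -1.0], top_k=2)

# A student that matches the teacher has zero loss; a mismatched one does not.
loss_same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
loss_diff = distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
print(y, loss_same, loss_diff)
```

In a real system, the gate scores would themselves be produced by a learned network, and the distillation loss would typically be combined with an ordinary training loss on labeled data. The sketch only shows the arithmetic at the core of each idea.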

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)