Multi-Layer Perceptron (MLP)

What is a Perceptron?

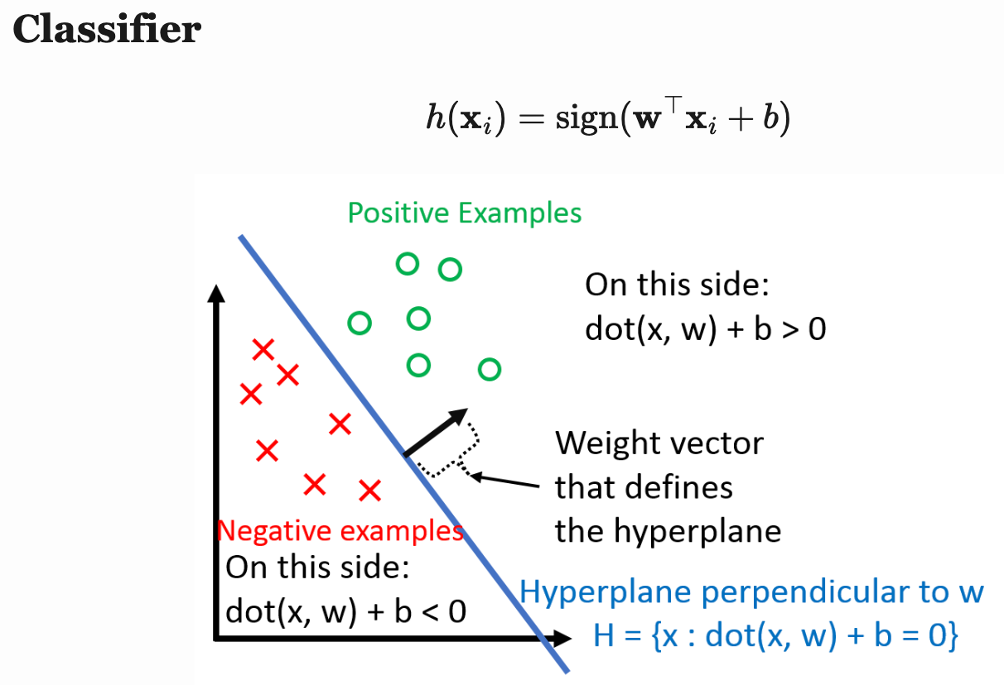

Before we learn about the multi-layer perceptron (MLP), we first need to understand the perceptron, since the MLP builds on the concept of the perceptron. The perceptron assumes that the dataset is linearly separable, i.e., that there exists a hyperplane that perfectly divides the dataset into its two classes. Suppose our dataset has two classes with labels +1 and -1. If the data are linearly separable, the perceptron is guaranteed to find such a separating hyperplane in a finite number of steps. If the assumption does not hold, the perceptron fails: its updates never settle on a hyperplane that classifies every example correctly. The perceptron classifier defines the hyperplane that separates the two classes as

$$\mathcal{H} = \{\mathbf{x} \mid \mathbf{w}^\top \mathbf{x} + b = 0\},$$

where $\mathbf{w}$ is the weight vector (normal to the hyperplane) and $b$ is the bias.

Positive examples are defined as the examples with

$$\mathbf{w}^\top \mathbf{x} + b > 0,$$

and negative examples are defined as examples with

$$\mathbf{w}^\top \mathbf{x} + b < 0.$$
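The definitions and the convergence claim above can be illustrated with a minimal sketch in Python with NumPy. It assumes labels in {+1, -1}, an explicit bias term b, and the standard perceptron update w ← w + y·x, b ← b + y, applied whenever a point is misclassified. The function names `perceptron_train` and `perceptron_predict`, the `max_epochs` cap, and the AND/XOR toy data are hypothetical choices made for illustration, not part of the source material.

```python
import numpy as np


def perceptron_train(X, y, max_epochs=100):
    """Train a perceptron with the classic mistake-driven update (illustrative sketch).

    X: (n, d) array of inputs; y: (n,) array of labels in {+1, -1}.
    Returns (w, b, converged), where converged is True only if a full
    pass over the data produced no mistakes.
    """
    n_features = X.shape[1]
    w = np.zeros(n_features)  # weight vector, normal to the hyperplane
    b = 0.0                   # bias (offset of the hyperplane)
    for _ in range(max_epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            # Misclassified (or exactly on the hyperplane) when y_i * (w.x_i + b) <= 0.
            if y_i * (np.dot(w, x_i) + b) <= 0:
                w += y_i * x_i  # tilt the hyperplane toward classifying x_i correctly
                b += y_i        # shift the hyperplane in the same direction
                mistakes += 1
        if mistakes == 0:
            # No updates this epoch, so every point satisfies y_i * (w.x_i + b) > 0.
            return w, b, True
    # Epoch budget exhausted without a clean pass (e.g. non-separable data).
    return w, b, False


def perceptron_predict(X, w, b):
    """Return +1 where w.x + b > 0, else -1 (a score of exactly 0 maps to -1, an arbitrary tie-break)."""
    return np.where(X @ w + b > 0, 1, -1)


# Usage: AND is linearly separable, XOR is not.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([-1, -1, -1, 1])
y_xor = np.array([-1, 1, 1, -1])

w_and, b_and, ok_and = perceptron_train(X, y_and)
w_xor, b_xor, ok_xor = perceptron_train(X, y_xor)
print("AND converged:", ok_and, "predictions:", perceptron_predict(X, w_and, b_and))
print("XOR converged:", ok_xor)
```

Because an epoch with no mistakes means every point lies strictly on its correct side of the hyperplane, `converged` can only be True when a separating hyperplane exists. For the AND labels the loop stops early; for XOR, which no single hyperplane can separate, it simply runs until `max_epochs`, which is the failure case described above.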

Image source: [1]

[1] Weinberger. URL: https://www.cs.cornell.edu/courses/cs4780/2023fa/lectures/lecturenote03.html

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)