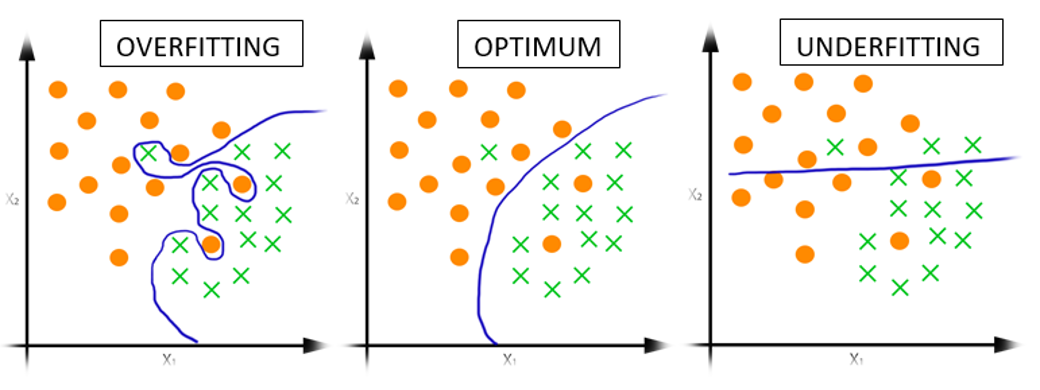

Underfitting vs. Overfitting

Underfitting happens when a model is too simple to capture the relationships inherent in the training data, so it performs poorly on both training and testing data. In other words, the model “failed to learn”. Underfitting can be addressed by adding complexity to the model. Possible solutions include adding more features, using a more complex model, boosting, etc.
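As a rough illustration of underfitting, the sketch below fits a straight line to data that follow a quadratic trend, then adds a squared feature. The synthetic data, the noise level, and the helper name `fit_and_score` are assumptions made for this example and are not part of the original material.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumed for illustration): quadratic trend plus small noise.
x_train = rng.uniform(-3, 3, size=200)
y_train = x_train**2 + rng.normal(0, 0.3, size=200)
x_test = rng.uniform(-3, 3, size=200)
y_test = x_test**2 + rng.normal(0, 0.3, size=200)


def fit_and_score(degree):
    """Least-squares polynomial fit; returns (train MSE, test MSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = float(np.mean((np.polyval(coeffs, x_train) - y_train) ** 2))
    test_mse = float(np.mean((np.polyval(coeffs, x_test) - y_test) ** 2))
    return train_mse, test_mse


# Degree 1: a straight line cannot represent the curvature (underfits).
linear_train, linear_test = fit_and_score(1)
# Degree 2: adding the x**2 feature gives the model enough capacity.
quad_train, quad_test = fit_and_score(2)

print(f"linear:    train MSE={linear_train:.3f}, test MSE={linear_test:.3f}")
print(f"quadratic: train MSE={quad_train:.3f}, test MSE={quad_test:.3f}")
```

The linear model should show a high error on both the training and the testing set, which is the signature of underfitting. The quadratic model should bring both errors down to roughly the noise level.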

Overfitting happens when a model has more parameters than can be justified by the data. It leads to poor generalization to new testing data. A sign of overfitting is that the model performs very well on training data but poorly on testing data. Possible solutions include using a less complex model, more data, bagging, etc.
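The gap between training and testing error can be sketched with polynomial fits, using the polynomial degree as a stand-in for model complexity. Everything here is an assumption for illustration: the sine-shaped target, the noise level, the sample sizes, and the helper names `make_data` and `mse`.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)


def make_data(x):
    """Noisy samples of an assumed target function sin(3x)."""
    return np.sin(3 * x) + rng.normal(0, 0.3, size=x.shape)


def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))


# Small training set, larger training set, and a held-out testing set.
x_small = np.linspace(-1, 1, 15)
y_small = make_data(x_small)
x_large = np.linspace(-1, 1, 2000)
y_large = make_data(x_large)
x_test = rng.uniform(-1, 1, size=500)
y_test = make_data(x_test)

# Degree 3: 4 parameters for 15 points.
simple = Polynomial.fit(x_small, y_small, deg=3)
# Degree 14: 15 parameters for 15 points, enough to fit the noise itself.
flexible = Polynomial.fit(x_small, y_small, deg=14)
# Same flexible model, but trained on far more data.
flexible_more_data = Polynomial.fit(x_large, y_large, deg=14)

simple_train, simple_test = mse(simple, x_small, y_small), mse(simple, x_test, y_test)
flexible_train, flexible_test = mse(flexible, x_small, y_small), mse(flexible, x_test, y_test)
more_data_test = mse(flexible_more_data, x_test, y_test)

print(f"degree 3:             train={simple_train:.4f}  test={simple_test:.4f}")
print(f"degree 14:            train={flexible_train:.4f}  test={flexible_test:.4f}")
print(f"degree 14, more data: test={more_data_test:.4f}")
```

The degree-14 model should reach a near-zero training error while its testing error is far worse than that of the degree-3 model. Training the same degree-14 model on the larger set should shrink the testing error again, consistent with "more data" as a remedy.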

Image source: [1]

References:

© Chishiki-AI | Cornell University | Center for Advanced Computing | Copyright Statement | Access Statement

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)
