Transfer Learning

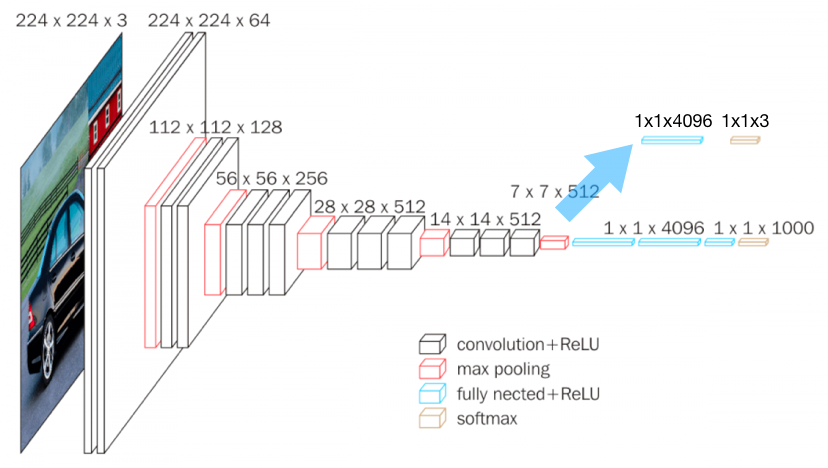

In general, a larger model performs better, provided it does not overfit. Training a large model without overfitting requires a large dataset. For specialized tasks such as natural hazard detection, however, there may not be enough images, and training a large model on such a small dataset will likely lead to overfitting. Transfer learning is a common workaround: we take a large model that has already been trained on a large dataset for a different task and train it further on our small dataset. Because the small dataset typically has fewer classes than the original one, the “classification” block of the model usually needs to be modified, for example by replacing its final layer with one that outputs the new number of classes.

Image source: [1]

[1] Has. URL: https://neurohive.io/en/popular-networks/vgg16/

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)