Residual Connection

Deeper CNN models often perform worse than shallower ones. This is not caused by overfitting, because adding more layers can lead to higher training error, not just higher test error. One explanation is that during training, gradients vanish in some layers, so those layers and all the layers preceding them fail to learn.

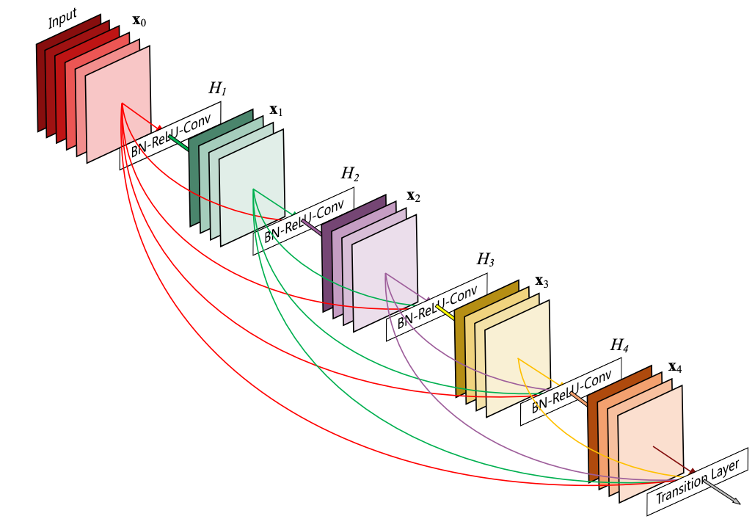
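The vanishing-gradient argument can be illustrated with a toy calculation. The sketch below is a simplification, not a real network: each layer is reduced to a single scalar local derivative, and the depth (20) and derivative value (0.1) are hypothetical numbers chosen only for illustration. By the chain rule, a plain stack multiplies the local derivatives together, while a stack of residual blocks of the form y = x + f(x) multiplies factors of (1 + f'(x)), so the identity path keeps the product from collapsing toward zero.

```python
def plain_chain_gradient(local_grads):
    """Gradient through stacked layers y = f(x): product of local derivatives."""
    grad = 1.0
    for d in local_grads:
        grad *= d
    return grad


def residual_chain_gradient(local_grads):
    """Gradient through stacked blocks y = x + f(x): product of (1 + f'(x))."""
    grad = 1.0
    for d in local_grads:
        grad *= 1.0 + d
    return grad


# Hypothetical setting: 20 layers, each with a small local derivative of 0.1.
local_grads = [0.1] * 20
plain = plain_chain_gradient(local_grads)
residual = residual_chain_gradient(local_grads)

print(f"plain stack gradient:    {plain:.3e}")
print(f"residual stack gradient: {residual:.3e}")
```

In this toy setting the plain-stack gradient shrinks by a factor of ten per layer, while the residual-stack gradient never drops below 1. Real networks have matrix-valued derivatives, nonlinearities, and normalization layers, so the picture is less clean, but the role of the identity path is the same.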

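A residual (skip) connection adds a block's input to its output, which gives gradients a direct path back to earlier layers. Residual connections can also help reduce model complexity. DenseNet [1], in which each layer is connected to all preceding layers through skip connections, can match the prediction accuracy of comparable networks with fewer model parameters.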
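To make the dense connectivity concrete, here is a minimal sketch of the channel bookkeeping in one dense block. In DenseNet, each layer takes the concatenation of all earlier feature maps as input and adds a small, fixed number of new feature maps (the growth rate). The function names and the example values (64 initial channels, growth rate 32, 4 layers) are assumptions made for illustration, and the parameter count covers only one bias-free 3x3 convolution per layer, leaving out batch normalization, bottleneck layers, and transition layers.

```python
def dense_block_input_channels(initial_channels, growth_rate, num_layers):
    """Input channel count seen by each layer of a dense block.

    Layer i receives the block input plus the outputs of the i earlier
    layers, each of which contributes `growth_rate` feature maps.
    """
    return [initial_channels + i * growth_rate for i in range(num_layers)]


def dense_block_conv_params(initial_channels, growth_rate, num_layers,
                            kernel_size=3):
    """Weight count for one k x k convolution per layer (no bias terms)."""
    in_channels = dense_block_input_channels(
        initial_channels, growth_rate, num_layers
    )
    return sum(
        kernel_size * kernel_size * in_ch * growth_rate
        for in_ch in in_channels
    )


# Hypothetical block: 64 input channels, growth rate 32, 4 layers.
channels = dense_block_input_channels(64, 32, 4)
params = dense_block_conv_params(64, 32, 4)

print("input channels per layer:", channels)
print("3x3 conv weights in block:", params)
```

Because every layer can reuse earlier feature maps directly, each layer only needs to produce a few new ones, which is one way to read the parameter savings described above.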

References:

[1] Gao Huang, Zhuang Liu, Laurens van der Maaten, and Kilian Q. Weinberger. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4700–4708. 2017.

© Chishiki-AI | Cornell University | Center for Advanced Computing | Copyright Statement | Access Statement

CVW material development is supported by NSF OAC awards 1854828, 2321040, 2323116 (UT Austin) and 2005506 (Indiana University)